Week 9 [Mar 18]

Tutorials

Come prepared for the tutorial:

- This week's tutorial involves discussing some theory questions. There are 5 questions. Please divide the questions among yourselves and come prepared to answer them during the tutorial. We will not be able to finish all five questions during the tutorial unless you come prepared.

[W9.1] Design Principles: Basics

Abstraction

Can explain abstraction

Abstraction is a technique for dealing with complexity. It works by establishing a level of complexity we are interested in, and suppressing the more complex details below that level.

The guiding principle of abstraction is that only details that are relevant to the current perspective or the task at hand need to be considered. As most programs are written to solve complex problems involving large amounts of intricate details, it is impossible to deal with all these details at the same time. That is where abstraction can help.

Ignoring lower level data items and thinking in terms of bigger entities is called data abstraction.

Within a certain software component, we might deal with a user data type, while ignoring the details contained in the user data item such as name, and date of birth. These details have been ‘abstracted away’ as they do not affect the task of that software component.

Control abstraction abstracts away details of the actual control flow to focus on tasks at a simplified level.

print(“Hello”) is an abstraction of the actual output mechanism within the computer.

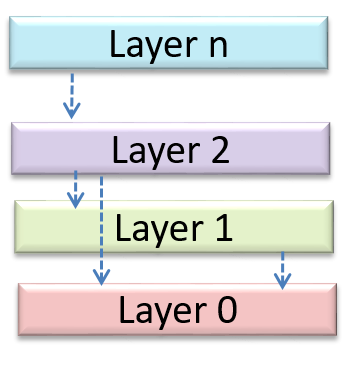

Abstraction can be applied repeatedly to obtain progressively higher levels of abstractions.

An example of different levels of data abstraction: a File is a data item that is at a higher level than an array and an array is at a higher level than a bit.

An example of different levels of control abstraction: execute(Game) is at a higher level than print(Char), which is at a higher level than an Assembly language instruction MOV.

Abstraction is a general concept that is not limited to just data or control abstractions.

Some more general examples of abstraction:

- An OOP class is an abstraction over related data and behaviors.

- An architecture is a higher-level abstraction of the design of a software.

- Models (e.g., UML models) are abstractions of some aspect of reality.

Coupling

Can explain coupling

Coupling is a measure of the degree of dependence between components, classes, methods, etc. Low coupling indicates that a component is less dependent on other components. High coupling (aka tight coupling or strong coupling) is discouraged due to the following disadvantages:

- Maintenance is harder because a change in one module could cause changes in other modules coupled to it (i.e. a ripple effect).

- Integration is harder because multiple components coupled with each other have to be integrated at the same time.

- Testing and reuse of the module is harder due to its dependence on other modules.

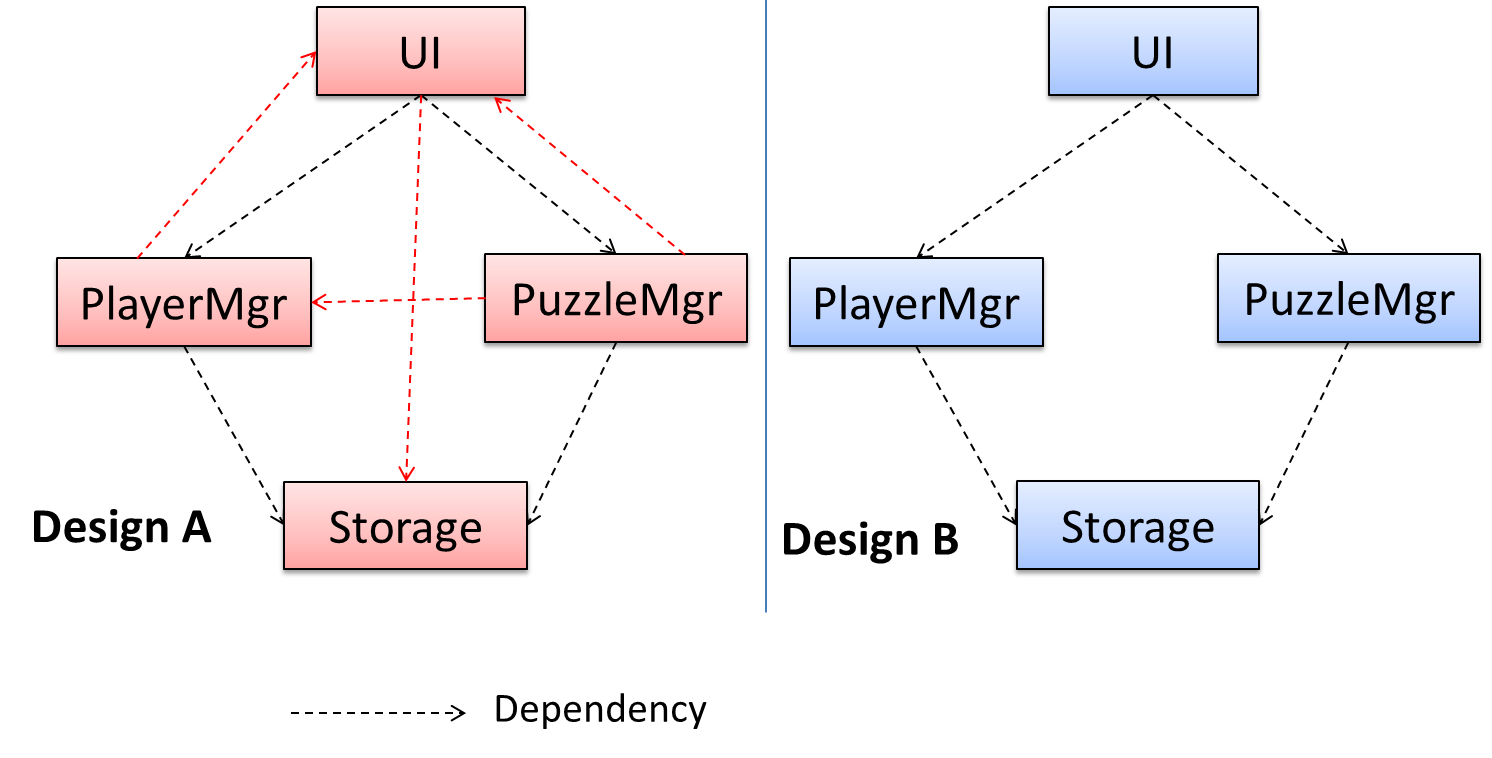

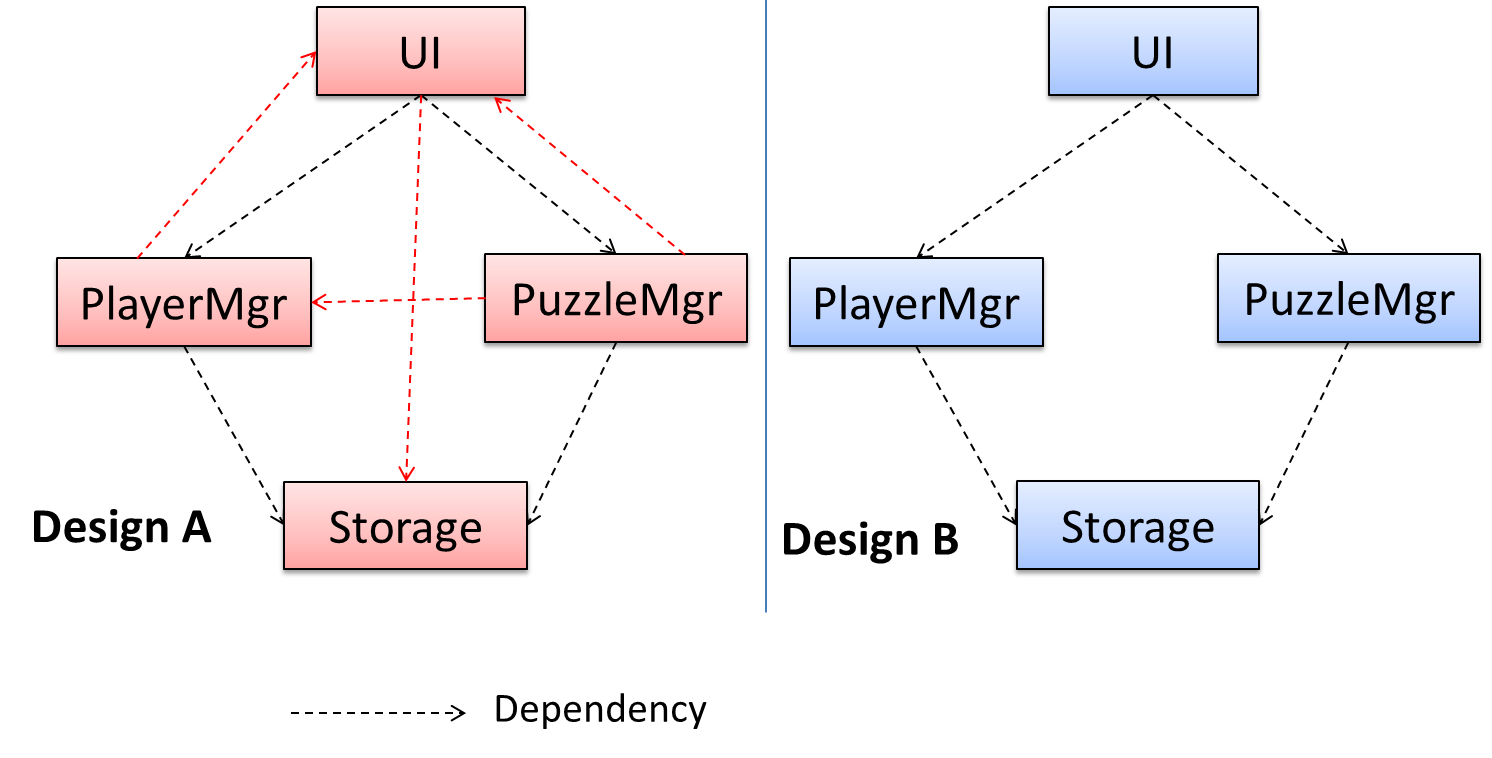

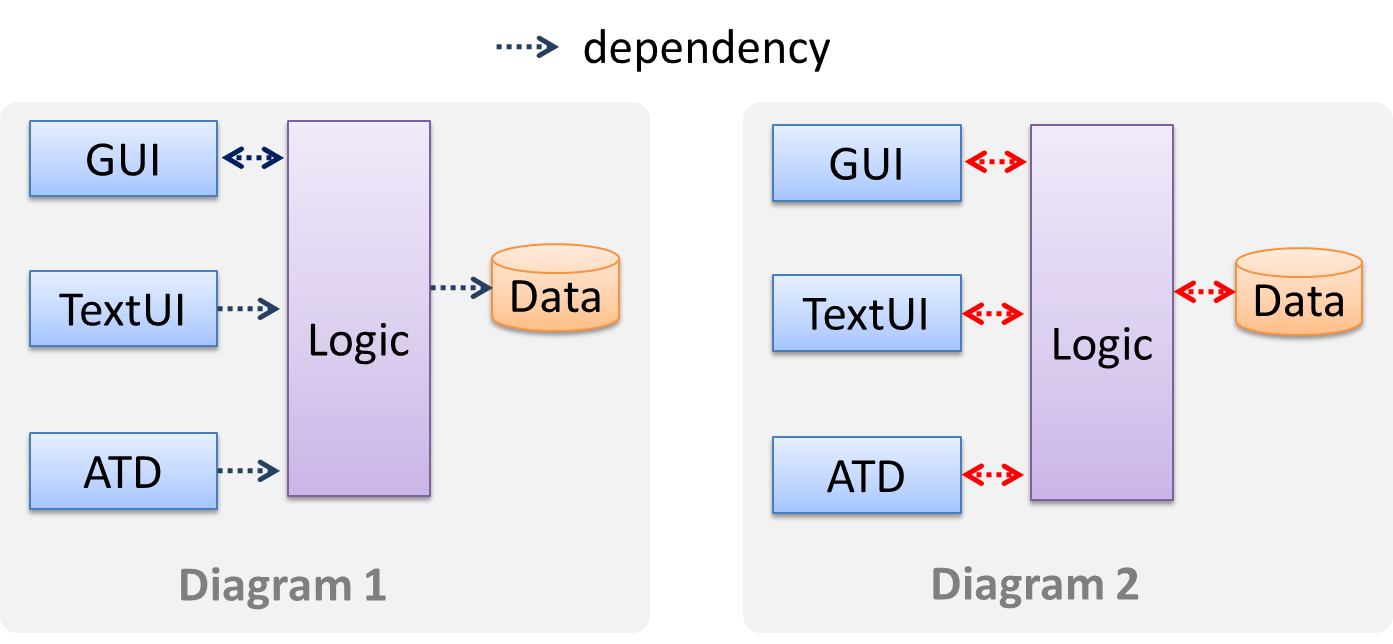

In the example below, design A appears to have more coupling between the components than design B.

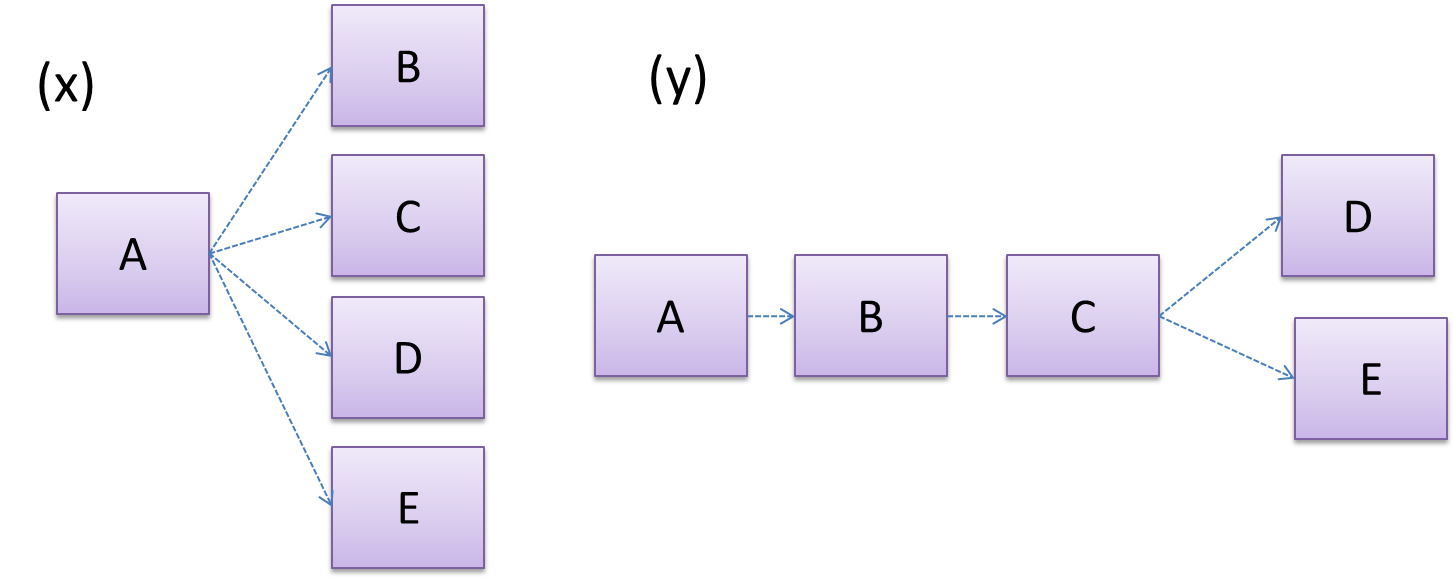

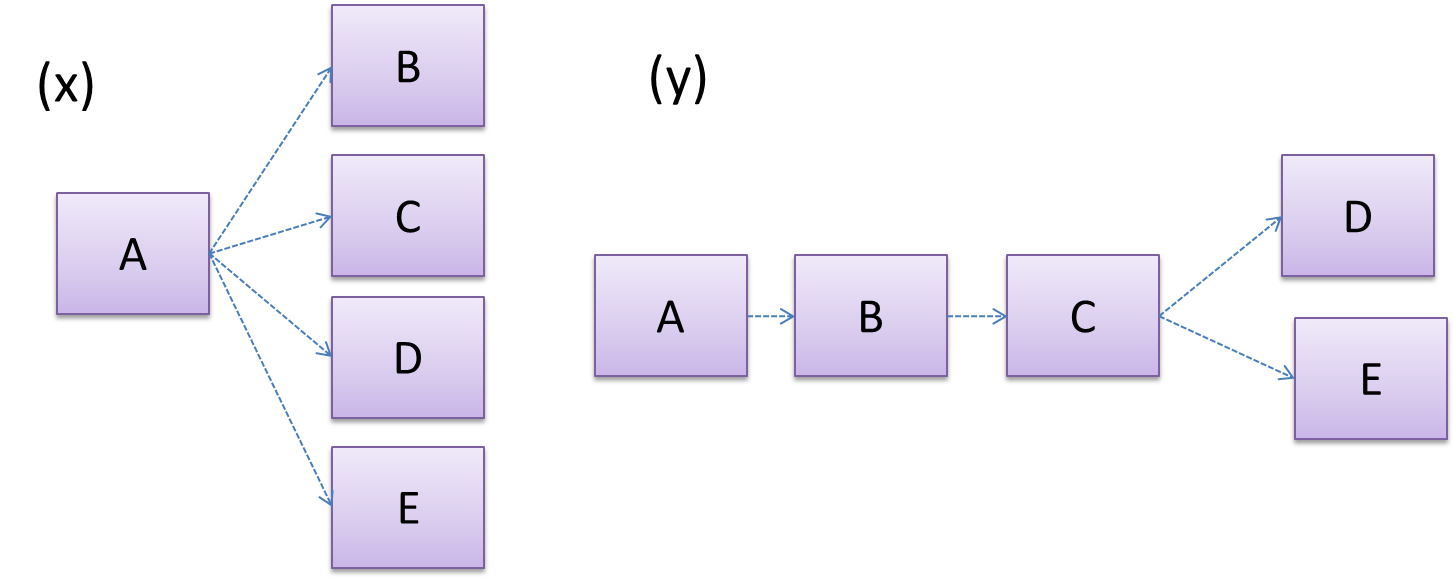

Discuss the coupling levels of alternative designs x and y.

Overall coupling levels in x and y seem to be similar (neither has more dependencies than the other). (Note that the number of dependency links is not a definitive measure of the level of coupling. Some links may be stronger than others.) However, in x, A is highly coupled to the rest of the system while B, C, D, and E are standalone (do not depend on anything else). In y, no component is as highly coupled as A of x. However, only D and E are standalone.

Explain the link (if any) between regressions and coupling.

When the system is highly-coupled, the risk of regressions is higher too e.g. when component A is modified, all components ‘coupled’ to component A risk ‘unintended behavioral changes’.

Discuss the relationship between coupling and

Coupling decreases testability because if the

Choose the correct statements.

- a. As coupling increases, testability decreases.

- b. As coupling increases, the risk of regression increases.

- c. As coupling increases, the value of automated regression testing increases.

- d. As coupling increases, integration becomes easier as everything is connected together.

- e. As coupling increases, maintainability decreases.

(a)(b)(c)(d)(e)

Explanation: High coupling means either more components need to be integrated at once in a big-bang fashion (increasing the risk of things going wrong) or more drivers and stubs are required when integrating incrementally.

Can reduce coupling

X is coupled to Y if a change to Y can potentially require a change in X.

If Foo class calls the method Bar#read(), Foo is coupled to Bar because a change to Bar can potentially (but not always) require a change in the Foo class e.g. if the signature of the Bar#read() is changed, Foo needs to change as well, but a change to the Bar#write() method may not require a change in the Foo class because Foo does not call Bar#write().

class Foo{

...

new Bar().read();

...

}

class Bar{

void read(){

...

}

void write(){

...

}

}

Some examples of coupling: A is coupled to B if,

- A has access to the internal structure of B (this results in a very high level of coupling)

- A and B depend on the same global variable

- A calls B

- A receives an object of B as a parameter or a return value

- A inherits from B

- A and B are required to follow the same data format or communication protocol

Which of these indicate a coupling between components A and B?

- a. component A has access to internal structure of component B.

- b. component A and B are written by the same developer.

- c. component A calls component B.

- d. component A receives an object of component B as a parameter.

- e. component A inherits from component B.

- f. components A and B have to follow the same data format or communication protocol.

(a)(b)(c)(d)(e)(f)

Explanation: Being written by the same developer does not imply a coupling.

Can identify types of coupling

Some examples of different coupling types:

- Content coupling: one module modifies or relies on the internal workings of another module e.g., accessing local data of another module

- Common/Global coupling: two modules share the same global data

- Control coupling: one module controlling the flow of another, by passing it information on what to do e.g., passing a flag

- Data coupling: one module sharing data with another module e.g. via passing parameters

- External coupling: two modules share an externally imposed convention e.g., data formats, communication protocols, device interfaces.

- Subclass coupling: a class inherits from another class. Note that a child class is coupled to the parent class but not the other way around.

- Temporal coupling: two actions are bundled together just because they happen to occur at the same time e.g. extracting a contiguous block of code as a method although the code block contains statements unrelated to each other
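To make two of these types concrete, the sketch below contrasts control coupling with plain data coupling. The ReportPrinter class and its method names are hypothetical and are not part of the handout; the point is only the shape of the two styles.

```java
// Hypothetical example: ReportPrinter is not from the handout.
class ReportPrinter {
    // Control coupling: the caller passes a flag that steers
    // the internal control flow of this method.
    String print(String body, boolean asSummary) {
        if (asSummary) {
            return "SUMMARY: " + body;
        }
        return "FULL: " + body;
    }

    // Data coupling only: each method receives just the data it needs,
    // and the caller chooses behavior by choosing which method to call.
    String printSummary(String body) {
        return "SUMMARY: " + body;
    }

    String printFull(String body) {
        return "FULL: " + body;
    }
}
```

With the flag-based version, a caller has to know how the flag is interpreted inside print. The two dedicated methods remove that knowledge from the caller, which is usually considered the weaker form of coupling.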

Cohesion

Can explain cohesion

Cohesion is a measure of how strongly-related and focused the various responsibilities of a component are. A highly-cohesive component keeps related functionalities together while keeping out all other unrelated things.

Higher cohesion is better. Disadvantages of low cohesion (aka weak cohesion):

- Lowers the understandability of modules as it is difficult to express module functionalities at a higher level.

- Lowers maintainability because a module can be modified due to unrelated causes (reason: the module contains code unrelated to each other) or many modules may need to be modified to achieve a small change in behavior (reason: the code related to that change is not localized to a single module).

- Lowers reusability of modules because they do not represent logical units of functionality.

Can increase cohesion

Cohesion can be present in many forms. Some examples:

- Code related to a single concept is kept together, e.g. the Student component handles everything related to students.

- Code that is invoked close together in time is kept together, e.g. all code related to initializing the system is kept together.

- Code that manipulates the same data structure is kept together, e.g. the GameArchive component handles everything related to the storage and retrieval of game sessions.

Suppose a Payroll application contains a class that deals with writing data to the database. If the class includes some code to show an error dialog to the user if the database is unreachable, that class is not cohesive because it seems to be interacting with the user as well as the database.
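One possible way to restore cohesion in the Payroll scenario is sketched below. The class names PayrollStorage, PayrollUi, and StorageException are assumptions made for illustration, and the actual database access is left out. The storage class only reports the failure; deciding how to show it to the user is left to a UI class.

```java
// Hypothetical names; the handout does not name these classes.
class StorageException extends Exception {
    StorageException(String message) {
        super(message);
    }
}

// Deals with the database only. It never talks to the user.
class PayrollStorage {
    private final boolean reachable; // stands in for a real connection check

    PayrollStorage(boolean reachable) {
        this.reachable = reachable;
    }

    void save(String record) throws StorageException {
        if (!reachable) {
            throw new StorageException("Database is unreachable");
        }
        // writing the record to the database is omitted in this sketch
    }
}

// Deals with the user only. It decides how a failure is presented.
class PayrollUi {
    String trySave(PayrollStorage storage, String record) {
        try {
            storage.save(record);
            return "Saved";
        } catch (StorageException e) {
            return "Error: " + e.getMessage();
        }
    }
}
```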

Compare the cohesion of the following two versions of the EmailMessage class. Which one is more cohesive and why?

// version-1

class EmailMessage {

private String sendTo;

private String subject;

private String message;

public EmailMessage(String sendTo, String subject, String message) {

this.sendTo = sendTo;

this.subject = subject;

this.message = message;

}

public void sendMessage() {

// sends message using sendTo, subject and message

}

}

// version-2

class EmailMessage {

private String sendTo;

private String subject;

private String message;

private String username;

public EmailMessage(String sendTo, String subject, String message) {

this.sendTo = sendTo;

this.subject = subject;

this.message = message;

}

public void sendMessage() {

// sends message using sendTo, subject and message

}

public void login(String username, String password) {

this.username = username;

// code to login

}

}

Version 2 is less cohesive.

Explanation: Version 2 is handling functionality related to login, which is not directly related to the concept of ‘email message’ that the class is supposed to represent. On a related note, we can improve the cohesion of both versions by removing the sendMessage functionality. Although sending a message is related to emails, this class is supposed to represent an email message, not an email server.

Some other principles

Can explain single responsibility principle

Single Responsibility Principle (SRP): A class should have one, and only one, reason to change. -- Robert C. Martin

If a class has only one responsibility, it needs to change only when there is a change to that responsibility.

Consider a TextUi class that does parsing of the user commands as well as interacting with the user. That class needs to change when the formatting of the UI changes as well as when the syntax of the user command changes. Hence, such a class does not follow the SRP.
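A minimal sketch of how the TextUi example could be split so that each class has one reason to change. CommandParser, its parseCommandWord method, and the formatResult method are hypothetical names introduced here; the handout only names TextUi.

```java
// Changes only when the command syntax changes.
// (CommandParser is a hypothetical class, not from the handout.)
class CommandParser {
    /** Returns the command word, i.e. the first whitespace-separated token, in lower case. */
    String parseCommandWord(String userInput) {
        return userInput.trim().split("\\s+")[0].toLowerCase();
    }
}

// Changes only when the formatting of the UI changes.
class TextUi {
    /** Formats a result for display; the "> " prefix is an arbitrary choice. */
    String formatResult(String result) {
        return "> " + result;
    }
}
```

A change to the command syntax now touches only CommandParser, and a change to the display format touches only TextUi.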

Gather together the things that change for the same reasons. Separate those things that change for different reasons. ―Agile Software Development, Principles, Patterns, and Practices by Robert C. Martin

- An explanation of the SRP from www.oodesign.com

- Another explanation (more detailed) by Patkos Csaba

- A book chapter on SRP, written by the father of the principle himself, Robert C. Martin.

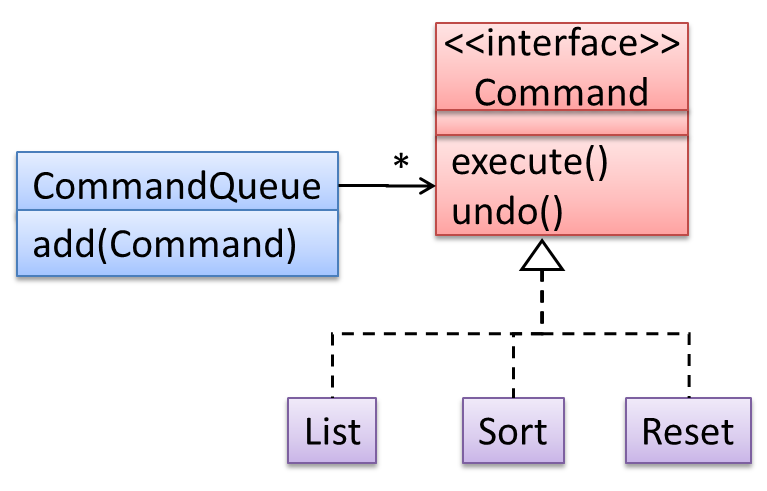

Can explain open-closed principle (OCP)

The Open-Closed Principle aims to make a code entity easy to adapt and reuse without needing to modify the code entity itself.

Open-Closed Principle (OCP): A module should be open for extension but closed for modification. That is, modules should be written so that they can be extended, without requiring them to be modified. -- proposed by Bertrand Meyer

In object-oriented programming, OCP can be achieved in various ways. This often requires separating the specification (i.e. interface) of a module from its implementation.

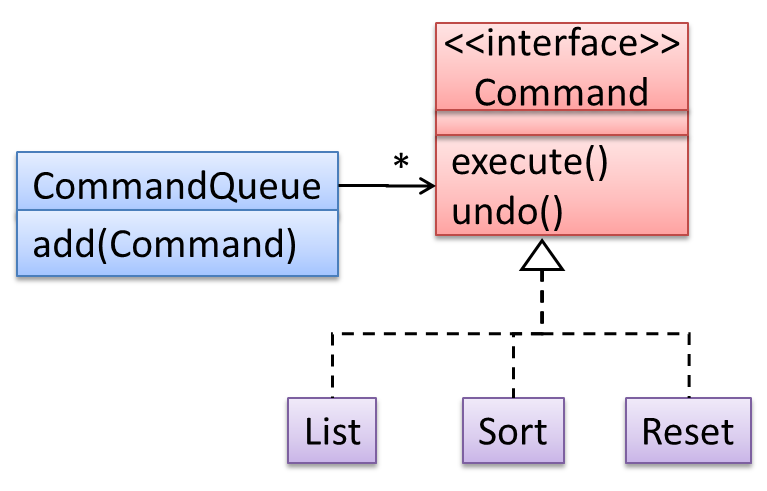

In the design given below, the behavior of the CommandQueue class can be altered by adding more concrete Command subclasses. For example, by including a Delete class alongside List, Sort, and Reset, the CommandQueue can now perform delete commands without modifying its code at all. That is, its behavior was extended without having to modify its code. Hence, it was open to extensions, but closed to modification.
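The CommandQueue design can be sketched in Java roughly as follows. This is an illustration under assumptions, not the handout's implementation: the execute method, the String results, and the class names ListCommand, SortCommand, and DeleteCommand (used instead of List, Sort, and Delete to avoid clashing with java.util.List) are all made up here.

```java
import java.util.ArrayList;
import java.util.List;

// The specification that CommandQueue depends on.
abstract class Command {
    abstract String execute();
}

class ListCommand extends Command {
    @Override
    String execute() {
        return "listed";
    }
}

class SortCommand extends Command {
    @Override
    String execute() {
        return "sorted";
    }
}

// Added later; CommandQueue is not touched to support it.
class DeleteCommand extends Command {
    @Override
    String execute() {
        return "deleted";
    }
}

class CommandQueue {
    private final List<Command> commands = new ArrayList<>();

    void add(Command command) {
        commands.add(command);
    }

    /** Runs every queued command and collects the results in order. */
    List<String> executeAll() {
        List<String> results = new ArrayList<>();
        for (Command command : commands) {
            results.add(command.execute());
        }
        return results;
    }
}
```

Because CommandQueue refers only to the abstract Command type, adding DeleteCommand extends what the queue can do without any edit to the queue itself.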

The behavior of a Java generic class can be altered by passing it a different class as a parameter. In the code below, the ArrayList class behaves as a container of Students in one instance and as a container of Admin objects in the other instance, without having to change its code. That is, the behavior of the ArrayList class is extended without modifying its code.

ArrayList<Student> students = new ArrayList<Student>();

ArrayList<Admin> admins = new ArrayList<Admin>();

Which of these is closest to the meaning of the open-closed principle?

(a)

Explanation: Please refer to the handout for the definition of OCP.

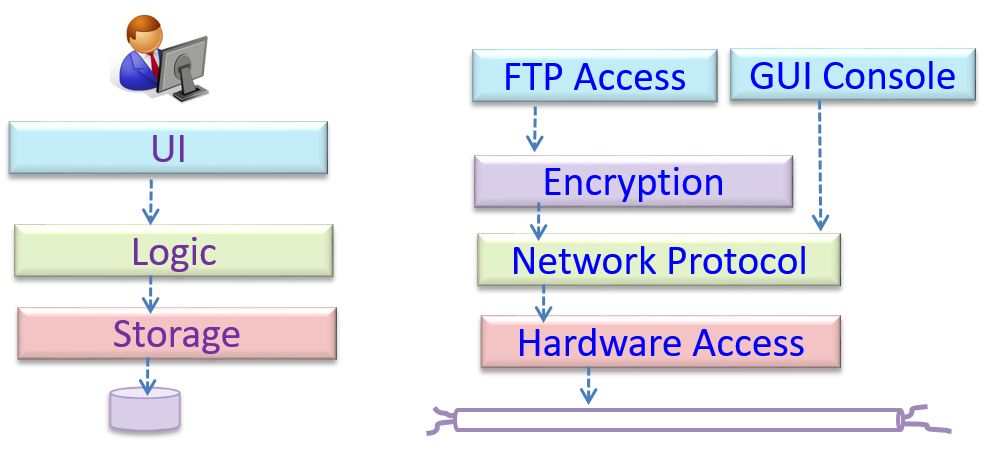

Can explain separation of concerns principle

Separation of Concerns Principle (SoC): To achieve better modularity, separate the code into distinct sections, such that each section addresses a separate concern. -- Proposed by Edsger W. Dijkstra

A concern in this context is a set of information that affects the code of a computer program.

Examples for concerns:

- A specific feature, such as the code related to the add employee feature

- A specific aspect, such as the code related to persistence or security

- A specific entity, such as the code related to the Employee entity

Applying

If the code related to persistence is separated from the code related to security, a change to how the data are persisted will not need changes to how the security is implemented.

This principle can be applied at the class level, as well as on higher levels.

The

This principle should lead to higher cohesion and lower coupling.

“Only the GUI class should interact with the user. The GUI class should only concern itself with user interactions”. This statement follows from,

- a. A software design should promote separation of concerns in a design.

- b. A software design should increase cohesion of its components.

- c. A software design should follow single responsibility principle.

(a)(b)(c)

Explanation: By making ‘user interaction’ GUI class’ sole responsibility, we increase its cohesion. This is also in line with separation of concerns (i.e., we separated the concern of user interaction) and single responsibility principle (GUI class has only one responsibility).

[W9.2] Design Principles: Intermediate-Level

How Polymorphism Works

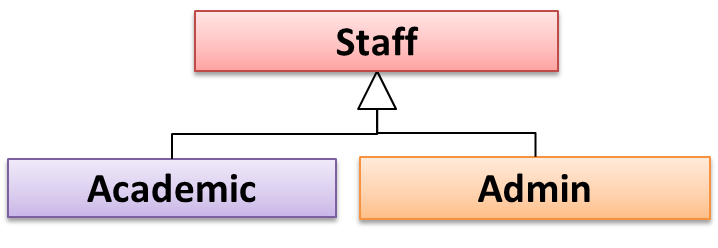

Can explain substitutability

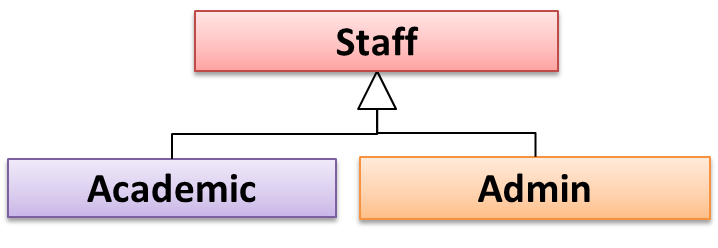

Every instance of a subclass is an instance of the superclass, but not vice-versa. As a result, inheritance allows substitutability: the ability to substitute a child class object where a parent class object is expected.

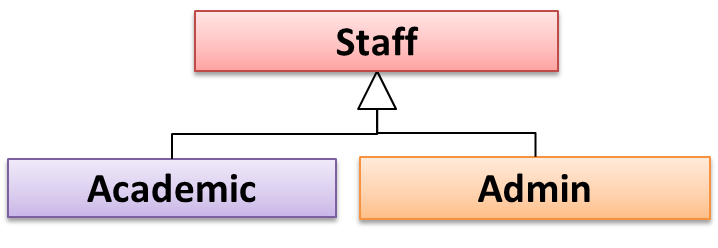

An Academic is an instance of a Staff, but a Staff is not necessarily an instance of an Academic, i.e. wherever an object of the superclass is expected, it can be substituted by an object of any of its subclasses.

The following code is valid because an AcademicStaff object is substitutable as a Staff object.

Staff staff = new AcademicStaff (); // OK

But the following code is not valid because staff is declared as a Staff type and therefore its value may or may not be of type AcademicStaff, which is the type expected by variable academicStaff.

Staff staff;

...

AcademicStaff academicStaff = staff; // Not OK
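The two assignments above can be tried out with the small sketch below. The empty class bodies and the asAcademic helper are assumptions added for illustration. The helper shows the usual way to go in the 'Not OK' direction when it is really needed: check the runtime type first, then cast explicitly.

```java
class Staff {
}

class AcademicStaff extends Staff {
}

class SubstitutabilityDemo {
    /** Accepts any Staff, including objects of its subclasses. */
    static boolean isStaff(Staff staff) {
        return staff != null;
    }

    /**
     * Hypothetical helper: returns the same object typed as AcademicStaff,
     * or null if the object is not actually an AcademicStaff.
     */
    static AcademicStaff asAcademic(Staff staff) {
        if (staff instanceof AcademicStaff) {
            return (AcademicStaff) staff; // explicit cast, safe after the check
        }
        return null;
    }
}
```

Passing a new AcademicStaff() to isStaff compiles because of substitutability, while the reverse direction needs the explicit check and cast shown in asAcademic.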

Can explain dynamic and static binding

Dynamic binding (aka late binding): When a method call is resolved at runtime rather than at compile time. Overridden methods are resolved using dynamic binding.

Method overriding is when a sub-class changes the behavior inherited from the parent class by re-implementing the method. Overridden methods have the same name, same type signature, and same return type.

Consider the following case of EvaluationReport class inheriting the Report class:

| Report methods | EvaluationReport methods | Overrides? |
|---|---|---|
| print() | print() | Yes |
| write(String) | write(String) | Yes |
| read():String | read(int):String | No. Reason: the two methods have different signatures; this is a case of overloading (rather than overriding). |

Method overloading is when there are multiple methods with the same name but different type signatures. Overloading is used to indicate that multiple operations do similar things but take different parameters.

Type Signature: The type signature of an operation is the type sequence of the parameters. The return type and parameter names are not part of the type signature. However, the parameter order is significant.

| Method | Type Signature |
|---|---|
| int add(int X, int Y) | (int, int) |
| void add(int A, int B) | (int, int) |
| void m(int X, double Y) | (int, double) |
| void m(double X, int Y) | (double, int) |

In the case below, the calculate method is overloaded because the two methods have the same name but different type signatures (String) and (int)

- calculate(String): void

- calculate(int): void

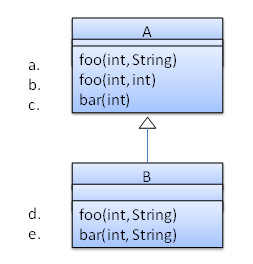

Which of these methods override another method? A is the parent class. B inherits A.

- a

- b

- c

- d

- e

d

Explanation: Method overriding requires a method in a child class to use the same method name and the same parameter sequence as a method in one of its ancestors.

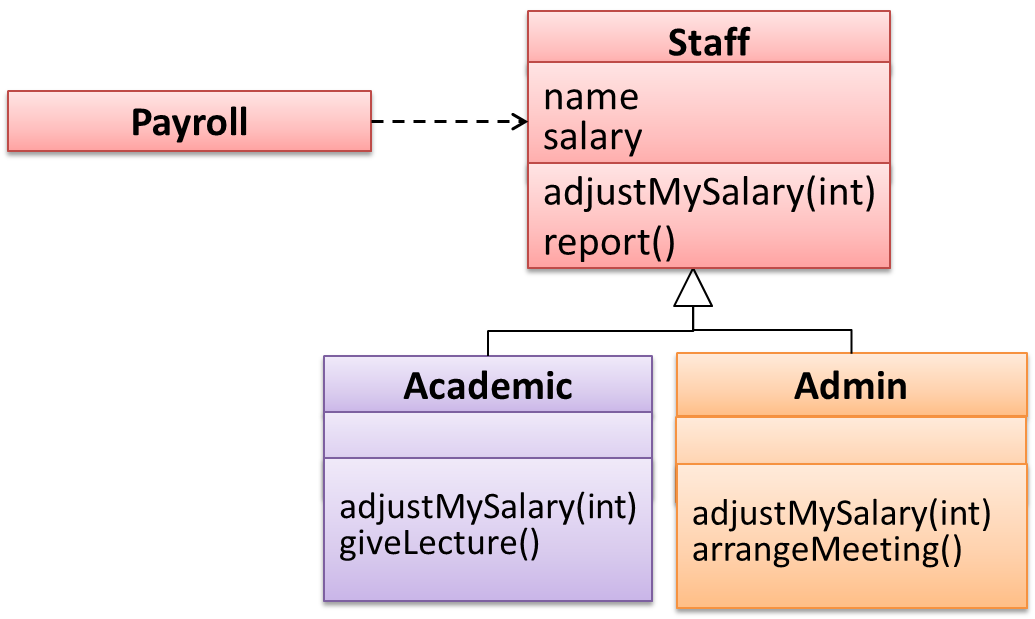

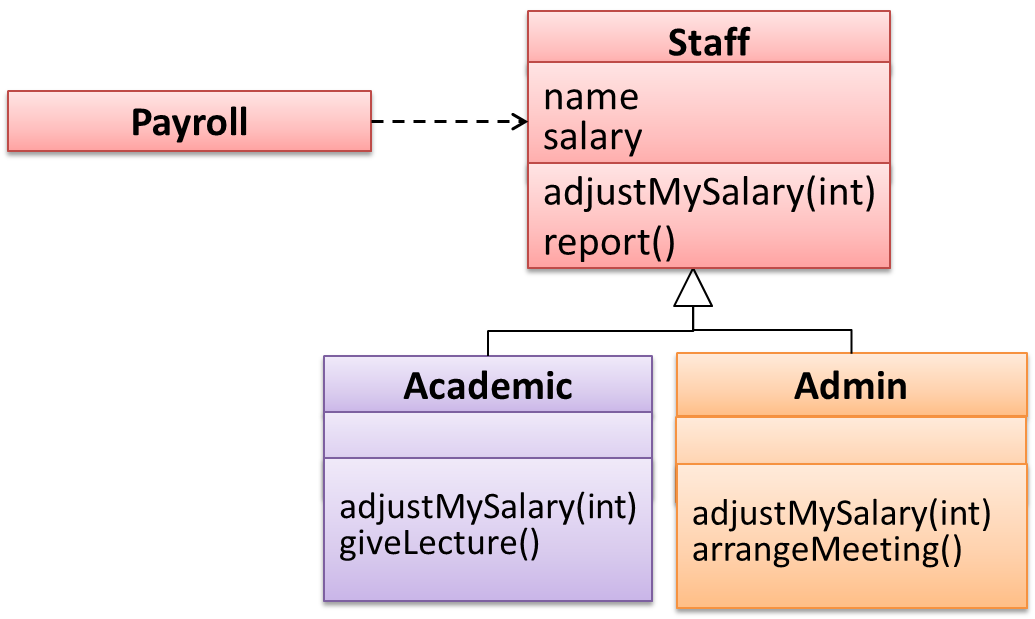

Consider the code below. The declared type of s is Staff and it appears as if the adjustSalary(int) operation of the Staff class is invoked.

void adjustSalary(int byPercent) {

for (Staff s: staff) {

s.adjustSalary(byPercent);

}

}

However, at runtime s can receive an object of any subclass of Staff. That means the adjustSalary(int) operation of the actual subclass object will be called. If the subclass does not override that operation, the operation defined in the superclass (in this case, Staff class) will be called.
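The behavior described above can be observed with the following sketch. The salary field, the constructors, and the 'double adjustment' rule in AcademicStaff are assumptions made up for this example; AdminStaff deliberately does not override the operation.

```java
import java.util.ArrayList;
import java.util.List;

class Staff {
    protected double salary;

    Staff(double salary) {
        this.salary = salary;
    }

    void adjustSalary(int byPercent) {
        salary += salary * byPercent / 100.0;
    }

    double getSalary() {
        return salary;
    }
}

class AcademicStaff extends Staff {
    AcademicStaff(double salary) {
        super(salary);
    }

    // Hypothetical rule for illustration: academics get twice the adjustment.
    @Override
    void adjustSalary(int byPercent) {
        super.adjustSalary(byPercent * 2);
    }
}

// Does not override adjustSalary, so the Staff version is used.
class AdminStaff extends Staff {
    AdminStaff(double salary) {
        super(salary);
    }
}

class Payroll {
    private final List<Staff> staff = new ArrayList<>();

    void add(Staff s) {
        staff.add(s);
    }

    void adjustSalary(int byPercent) {
        for (Staff s : staff) {
            s.adjustSalary(byPercent); // bound at runtime to the actual object's class
        }
    }
}
```

Although the loop variable is declared as Staff, the AcademicStaff object runs its own version of adjustSalary, while the AdminStaff object falls back to the version in Staff.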

Static binding (aka early binding): When a method call is resolved at compile time.

In contrast, overloaded methods are resolved using static binding.

Note how the constructor is overloaded in the class below. The method call new Account() is bound to the first constructor at compile time.

class Account {

Account () {

// Signature: ()

...

}

Account (String name, String number, double balance) {

// Signature: (String, String, double)

...

}

}

Similarly, the calculateGrade method is overloaded in the code below and a method call calculateGrade("A1213232") is bound to the second implementation, at compile time.

void calculateGrade (int[] averages) { ... }

void calculateGrade (String matric) { ... }
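The sketch below wraps the two overloads in a hypothetical Grader class and makes them return a String (the original methods are void) so that the chosen overload is visible. The describe overloads are an extra illustration, not from the handout: they show that the compiler picks the overload from the declared type of the argument, not from the runtime type of the object.

```java
// Grader and the describe methods are hypothetical, added for illustration.
class Grader {
    String calculateGrade(int[] averages) {
        return "grade from " + averages.length + " averages";
    }

    String calculateGrade(String matric) {
        return "grade for " + matric;
    }

    String describe(Object o) {
        return "Object";
    }

    String describe(String s) {
        return "String";
    }
}

class StaticBindingDemo {
    static String describeViaObjectReference(Grader grader) {
        Object o = "A1213232"; // runtime type is String, declared type is Object
        return grader.describe(o); // the compiler selects describe(Object)
    }
}
```

Calling describeViaObjectReference returns "Object" even though the argument is a String at runtime, because overload selection has already happened at compile time.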

Can explain how substitutability, operation overriding, and dynamic binding relate to polymorphism

Three concepts combine to achieve polymorphism: substitutability, operation overriding, and dynamic binding.

- Substitutability: Because of substitutability, you can write code that expects objects of a parent class and yet use that code with objects of child classes. That is how polymorphism is able to treat objects of different types as one type.

- Overriding: To get polymorphic behavior from an operation, the operation in the superclass needs to be overridden in each of the subclasses. That is how overriding allows objects of different subclasses to display different behaviors in response to the same method call.

- Dynamic binding: Calls to overridden methods are bound to the implementation of the actual object's class dynamically at runtime. That is how the polymorphic code can call the method of the parent class and yet execute the implementation of the child class.

Which one of these is least related to how OO programs achieve polymorphism?

(c)

Explanation: Operation overriding is the one that is related, not operation overloading.

More Design Principles

Can explain Liskov Substitution Principle

Liskov Substitution Principle (LSP): Derived classes must be substitutable for their base classes. -- proposed by Barbara Liskov

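LSP sounds the same as substitutability, but it goes beyond it: LSP implies that a subclass should not be more restrictive than the behavior specified by the superclass.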

Suppose the Payroll class depends on the adjustMySalary(int percent) method of the Staff class. Furthermore, the Staff class states that the adjustMySalary method will work for all positive percent values. Both Admin and Academic classes override the adjustMySalary method.

Now consider the following:

- Admin#adjustMySalary method works for both negative and positive percent values.

- Academic#adjustMySalary method works for percent values 1..100 only.

In the above scenario,

- Admin class follows LSP because it fulfills Payroll’s expectation of Staff objects (i.e. it works for all positive values). Substituting Admin objects for Staff objects will not break the Payroll class functionality.

- Academic class violates LSP because it will not work for percent values over 100 as expected by the Payroll class. Substituting Academic objects for Staff objects can potentially break the Payroll class functionality.
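The scenario above can be sketched as follows. Several details are assumptions made for this illustration: the salary field and its starting value, the use of IllegalArgumentException to signal an unsupported percent value, and the tryAdjust helper in Payroll.

```java
class Staff {
    protected double salary = 1000; // arbitrary starting value for the sketch

    /** Contract stated by Staff: works for all positive percent values. */
    void adjustMySalary(int percent) {
        if (percent <= 0) {
            throw new IllegalArgumentException("percent must be positive");
        }
        salary += salary * percent / 100.0;
    }
}

// Less restrictive than Staff: also accepts negative values. Follows LSP.
class Admin extends Staff {
    @Override
    void adjustMySalary(int percent) {
        salary += salary * percent / 100.0;
    }
}

// More restrictive than Staff: rejects values over 100. Violates LSP.
class Academic extends Staff {
    @Override
    void adjustMySalary(int percent) {
        if (percent < 1 || percent > 100) {
            throw new IllegalArgumentException("percent must be in 1..100");
        }
        salary += salary * percent / 100.0;
    }
}

class Payroll {
    /** Relies only on the contract of Staff; returns false if the call fails. */
    static boolean tryAdjust(Staff staff, int percent) {
        try {
            staff.adjustMySalary(percent);
            return true;
        } catch (IllegalArgumentException e) {
            return false;
        }
    }
}
```

A call such as Payroll.tryAdjust(staff, 150) is within the contract of Staff. It succeeds for Staff and Admin objects but fails for an Academic object, which is the breakage that LSP warns about.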

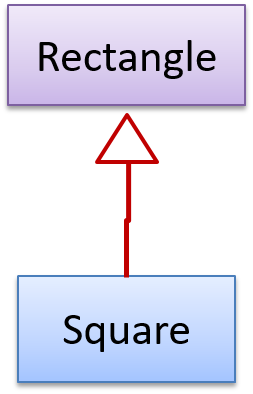

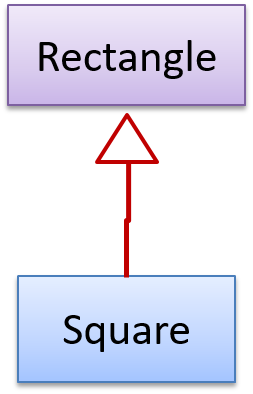

The Rectangle#resize() can take any integers for height and width. This contract is violated by the subclass Square#resize() because it does not accept a height that is different from the width.

class Rectangle {

...

/** sets the size to given height and width*/

void resize(int height, int width){

...

}

}

class Square extends Rectangle {

@Override

void resize(int height, int width){

if (height != width) {

//error

}

}

}

Now consider the following method that is written to work with the Rectangle class.

void makeSameSize(Rectangle original, Rectangle toResize){

toResize.resize(original.getHeight(), original.getWidth());

}

This code will fail if it is called as makeSameSize(new Rectangle(12,8), new Square(4, 4)). That is, the Square class is not substitutable for the Rectangle class.
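A runnable variant of the Rectangle and Square example is sketched below so that the failure can be observed. The fields, constructors, and getters are filled in as assumptions, and the '//error' placeholder is replaced here with an IllegalArgumentException, which is only one possible way of signalling the error.

```java
class Rectangle {
    protected int height;
    protected int width;

    Rectangle(int height, int width) {
        this.height = height;
        this.width = width;
    }

    int getHeight() {
        return height;
    }

    int getWidth() {
        return width;
    }

    /** Sets the size to the given height and width. */
    void resize(int height, int width) {
        this.height = height;
        this.width = width;
    }
}

class Square extends Rectangle {
    Square(int height, int width) {
        super(height, width);
    }

    @Override
    void resize(int height, int width) {
        if (height != width) {
            // assumption: the '//error' in the original is modelled as an exception
            throw new IllegalArgumentException("a square needs height == width");
        }
        super.resize(height, width);
    }
}

class LspDemo {
    static void makeSameSize(Rectangle original, Rectangle toResize) {
        toResize.resize(original.getHeight(), original.getWidth());
    }
}
```

In this sketch, LspDemo.makeSameSize(new Rectangle(12, 8), new Square(4, 4)) throws the exception, while the same call with two Rectangle objects works as intended.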

If a subclass imposes more restrictive conditions than its parent class, it violates Liskov Substitution Principle.

True.

Explanation: If the subclass is more restrictive than the parent class, code that worked with the parent class may not work with the child class. Hence, the substitutability does not exist and LSP has been violated.

Can explain the Law of Demeter

Law of Demeter (LoD):

- An object should have limited knowledge of another object.

- An object should only interact with objects that are closely related to it.

Also known as

- Don’t talk to strangers.

- Principle of least knowledge

More concretely, a method m of an object O should invoke only the methods of the following kinds of objects:

- The object O itself

- Objects passed as parameters of m

- Objects created/instantiated in m (directly or indirectly)

- Objects from the direct association of O

The following code fragment violates LoD due to the reason: while b is a ‘friend’ of foo (because it receives it as a parameter), g is a ‘friend of a friend’ (which should be considered a ‘stranger’), and g.doSomething() is analogous to ‘talking to a stranger’.

void foo(Bar b) {

Goo g = b.getGoo();

g.doSomething();

}

LoD aims to prevent objects navigating internal structures of other objects.
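One common way to remove the violation in the foo(Bar) fragment is to let Bar do the work on behalf of its caller, as sketched below. The method doSomethingWithGoo and the String return values are hypothetical additions for illustration.

```java
class Goo {
    String doSomething() {
        return "done";
    }
}

class Bar {
    private final Goo goo = new Goo();

    /** Hypothetical method: Bar talks to its own Goo so that callers do not have to. */
    String doSomethingWithGoo() {
        return goo.doSomething();
    }
}

class Foo {
    /** Talks only to its 'friend' b and no longer navigates into the internals of Bar. */
    String foo(Bar b) {
        return b.doSomethingWithGoo();
    }
}
```

With this change, Foo no longer needs to know that Bar contains a Goo, so a change to how Bar uses Goo does not ripple into Foo.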

An analogy for LoD can be drawn from Facebook. If Facebook followed LoD, you would not be allowed to see posts of friends of friends, unless they are your friends as well. If Jake is your friend and Adam is Jake’s friend, you should not be allowed to see Adam’s posts unless Adam is a friend of yours as well.

Explain the Law of Demeter using code examples. You are to make up your own code examples. Take Minesweeper as the basis for your code examples.

Let us take the Logic class as an example. Assume that it has the following operation.

setMinefield(Minefield mf):void

Consider the following that can happen inside this operation.

- mf.init(); : this does not violate LoD since LoD allows calling operations of parameters received.

- mf.getCell(1,3).clear(); : this violates LoD because Logic is handling Cell objects deep inside Minefield. Instead, it should be mf.clearCellAt(1,3);

- timer.start(); : this does not violate LoD because timer appears to be an internal component (i.e. a variable) of Logic itself.

- Cell c = new Cell(); c.init(); : this does not violate LoD because c was created inside the operation.

This violates Law of Demeter.

void foo(Bar b) {

Goo g = new Goo();

g.doSomething();

}

False

Explanation: The line g.doSomething() does not violate LoD because it is OK to invoke methods of objects created within a method.

Pick the odd one out.

- a. Law of Demeter.

- b. Don’t add people to a late project.

- c. Don’t talk to strangers.

- d. Principle of least knowledge.

- e. Coupling.

(b)

Explanation: Law of Demeter, which aims to reduce coupling, is also known as ‘Don’t talk to strangers’ and ‘Principle of least knowledge’.

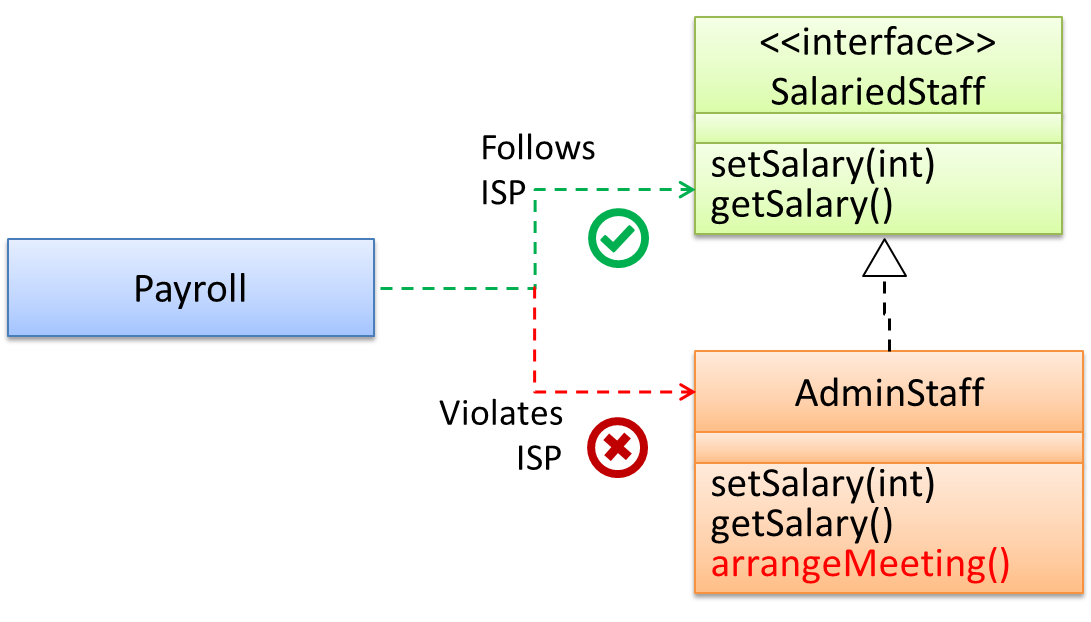

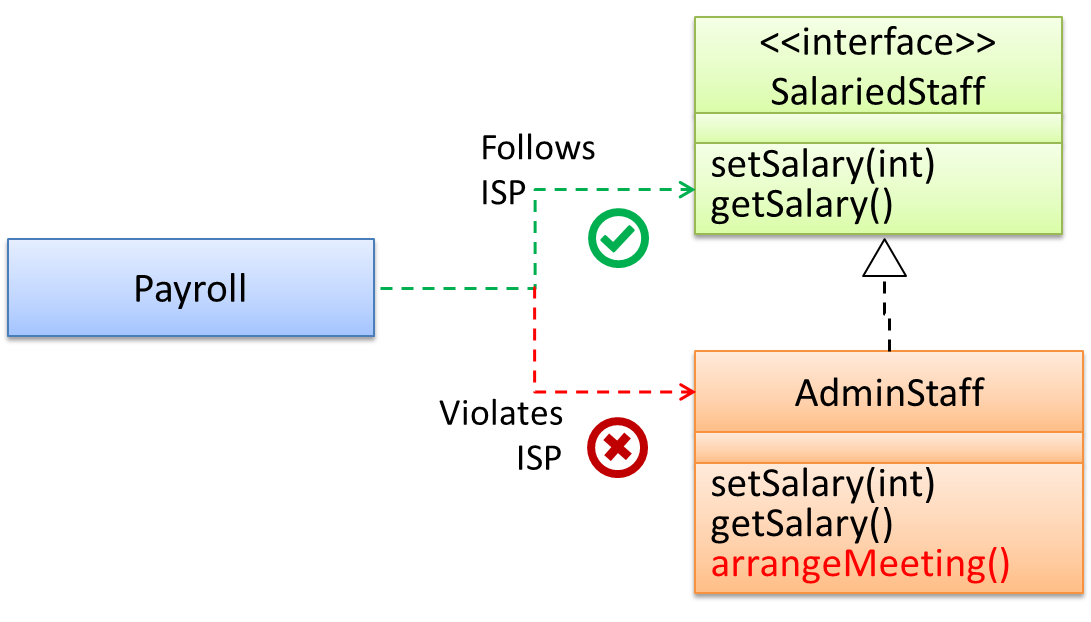

Can explain interface segregation principle

Interface Segregation Principle (ISP): No client should be forced to depend on methods it does not use.

The Payroll class should not depend on the AdminStaff class because it does not use the arrangeMeeting() method. Instead, it should depend on the SalariedStaff interface.

public class Payroll {

//...

private void adjustSalaries(AdminStaff adminStaff){ //violates ISP

//...

}

}

public class Payroll {

//...

private void adjustSalaries(SalariedStaff staff){ //does not violate ISP

//...

}

}
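A fuller sketch of the ISP-compliant version follows. The methods declared in SalariedStaff, the salary field, and the extra byPercent parameter are assumptions, since the handout shows only the names of the types. The adjustSalaries method is package-private here (it is private in the original) so that it can be called from outside the class.

```java
// Hypothetical interface contents; only the name SalariedStaff is from the handout.
interface SalariedStaff {
    void adjustSalary(int byPercent);

    double getSalary();
}

class AdminStaff implements SalariedStaff {
    private double salary;

    AdminStaff(double salary) {
        this.salary = salary;
    }

    @Override
    public void adjustSalary(int byPercent) {
        salary += salary * byPercent / 100.0;
    }

    @Override
    public double getSalary() {
        return salary;
    }

    // Not needed by Payroll, and not visible to it through SalariedStaff.
    void arrangeMeeting() {
        // meeting logic omitted
    }
}

class Payroll {
    // Depends only on the operations it actually uses.
    void adjustSalaries(SalariedStaff staff, int byPercent) {
        staff.adjustSalary(byPercent);
    }
}
```

Since Payroll sees only SalariedStaff, a change to arrangeMeeting cannot affect it, and any other class that implements SalariedStaff can be passed in as well.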

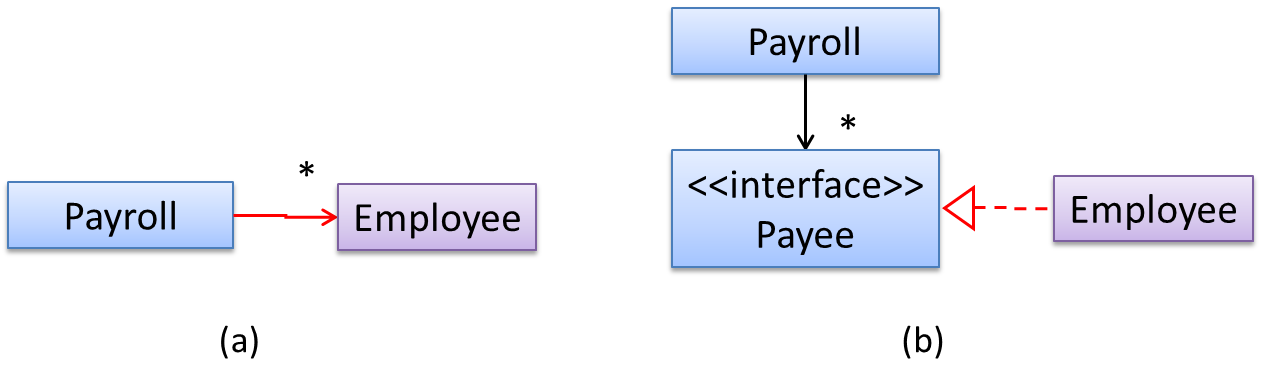

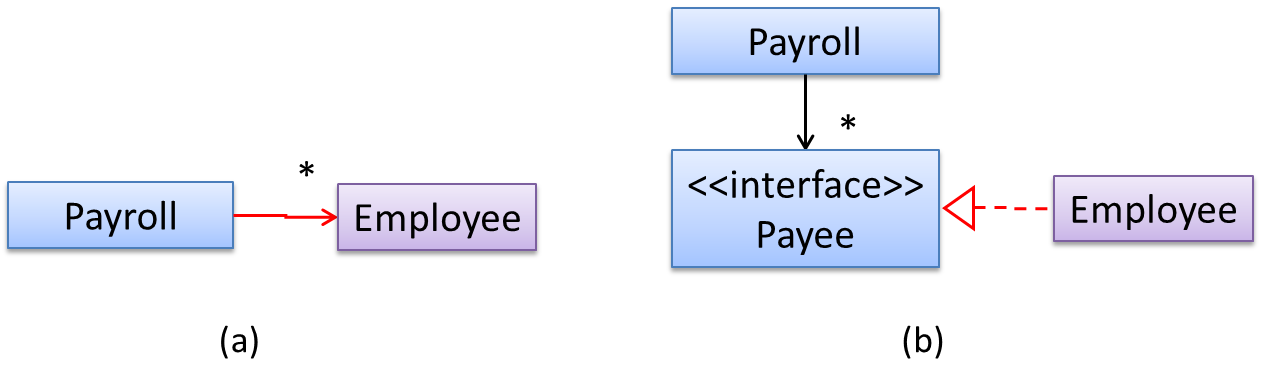

Can explain dependency inversion principle (DIP)

The Dependency Inversion Principle states that,

- High-level modules should not depend on low-level modules. Both should depend on abstractions.

- Abstractions should not depend on details. Details should depend on abstractions.

Example:

In design (a), the higher level class Payroll depends on the lower level class Employee, a violation of DIP. In design (b), both Payroll and Employee depend on the Payee interface (note that inheritance is a dependency).

Design (b) is more flexible (and less coupled) because now the Payroll class need not change when the Employee class changes.
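Design (b) could look roughly like the sketch below. The getPay method, the fields of Employee, and the totalPay operation are assumptions introduced for illustration; the handout names only Payroll, Employee, and Payee.

```java
import java.util.ArrayList;
import java.util.List;

// The abstraction that both sides depend on.
interface Payee {
    double getPay(); // hypothetical operation
}

// Low-level detail: depends on the abstraction by implementing it.
class Employee implements Payee {
    private final String name;
    private final double pay;

    Employee(String name, double pay) {
        this.name = name;
        this.pay = pay;
    }

    String getName() {
        return name;
    }

    @Override
    public double getPay() {
        return pay;
    }
}

// High-level module: knows about Payee only, never about Employee.
class Payroll {
    private final List<Payee> payees = new ArrayList<>();

    void add(Payee payee) {
        payees.add(payee);
    }

    double totalPay() {
        double total = 0;
        for (Payee payee : payees) {
            total += payee.getPay();
        }
        return total;
    }
}
```

Payroll compiles without any reference to Employee, so changes to Employee that keep the Payee contract intact do not require Payroll to change.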

Which of these statements is true about the Dependency Inversion Principle?

- a. It can complicate the design/implementation by introducing extra abstractions, but it has some benefits.

- b. It is often used during testing, to replace dependencies with mocks.

- c. It reduces dependencies in a design.

- d. It advocates making higher level classes to depend on lower level classes.

(a)

Explanation: Replacing dependencies with mocks is Dependency Injection, not DIP. DIP does not reduce dependencies, rather, it changes the direction of dependencies. Yes, it can introduce extra abstractions but often the benefit can outweigh the extra complications.

Can explain SOLID Principles

The five OOP principles given below are known as SOLID Principles (an acronym made up of the first letter of each principle):

Supplmentary → Principles →

Single Responsibility Principle (SRP): A class should have one, and only one, reason to change. -- Robert C. Martin

If a class has only one responsibility, it needs to change only when there is a change to that responsibility.

Consider a TextUi class that does parsing of the user commands as well as interacting with the user. That class needs to change when the formatting of the UI changes as well as when the syntax of the user command changes. Hence, such a class does not follow the SRP.
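One way to bring the TextUi example above in line with the SRP is to move the parsing into its own class. The sketch below is only an illustration; the class name `Parser` and the method names `parse` and `format` are assumptions, not part of the original example.

```java
/** Changes only when the syntax of user commands changes. */
class Parser {
    /** Splits raw input into a command word and its (optional) arguments. */
    String[] parse(String userInput) {
        return userInput.trim().split("\\s+", 2);
    }
}

/** Changes only when the formatting of the UI changes. */
class TextUi {
    /** Decorates a message for display to the user. */
    String format(String message) {
        return "> " + message;
    }
}
```

With this split, a change to the command syntax touches only Parser, and a change to the UI formatting touches only TextUi.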

Gather together the things that change for the same reasons. Separate those things that change for different reasons. ―Agile Software Development, Principles, Patterns, and Practices by Robert C. Martin

- An explanation of the SRP from www.oodesign.com

- Another explanation (more detailed) by Patkos Csaba

- A book chapter on SRP, written by the father of the principle himself, Robert C. Martin.

The Open-Closed Principle aims to make a code entity easy to adapt and reuse without needing to modify the code entity itself.

Open-Closed Principle (OCP): A module should be open for extension but closed for modification. That is, modules should be written so that they can be extended, without requiring them to be modified. -- proposed by Bertrand Meyer

In object-oriented programming, OCP can be achieved in various ways. This often requires separating the specification (i.e. interface) of a module from its implementation.

In the design given below, the behavior of the CommandQueue class can be altered by adding more concrete Command subclasses. For example, by including a Delete class alongside List, Sort, and Reset, the CommandQueue can now perform delete commands without modifying its code at all. That is, its behavior was extended without having to modify its code. Hence, it was open to extensions, but closed to modification.
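The CommandQueue design described above might look roughly like this in Java. This is a sketch under assumptions: the `execute()` and `executeAll()` methods are hypothetical, and the subclasses are named `ListCommand`, `SortCommand`, and `DeleteCommand` (rather than List, Sort, and Delete) only to avoid clashing with `java.util.List`.

```java
import java.util.ArrayList;
import java.util.List;

/** Specification that CommandQueue depends on. */
interface Command {
    String execute();
}

class ListCommand implements Command {
    @Override
    public String execute() {
        return "list";
    }
}

class SortCommand implements Command {
    @Override
    public String execute() {
        return "sort";
    }
}

/** Added later; CommandQueue did not have to change to support it. */
class DeleteCommand implements Command {
    @Override
    public String execute() {
        return "delete";
    }
}

/** Closed for modification: works with any current or future Command. */
class CommandQueue {
    private final List<Command> commands = new ArrayList<>();

    void add(Command command) {
        commands.add(command);
    }

    /** Executes the queued commands in insertion order and returns their results. */
    List<String> executeAll() {
        List<String> results = new ArrayList<>();
        for (Command command : commands) {
            results.add(command.execute());
        }
        commands.clear();
        return results;
    }
}
```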

The behavior of a Java generic class can be altered by passing it a different class as a parameter. In the code below, the ArrayList class behaves as a container of Students in one instance and as a container of Admin objects in the other instance, without having to change its code. That is, the behavior of the ArrayList class is extended without modifying its code.

    ArrayList<Student> students = new ArrayList<>();
    ArrayList<Admin> admins = new ArrayList<>();

Which of these is closest to the meaning of the open-closed principle?

(a)

Explanation: Please refer to the handout for the definition of OCP.

Liskov Substitution Principle (LSP): Derived classes must be substitutable for their base classes. -- proposed by Barbara Liskov

LSP sounds the same as substitutability (recapped below), but it goes further: a subclass should not be more restrictive than the behavior specified by its superclass.

Every instance of a subclass is an instance of the superclass, but not vice-versa. As a result, inheritance allows substitutability : the ability to substitute a child class object where a parent class object is expected.

An Academic is an instance of a Staff, but a Staff is not necessarily an instance of an Academic. i.e. wherever an object of the superclass is expected, it can be substituted by an object of any of its subclasses.

The following code is valid because an AcademicStaff object is substitutable as a Staff object.

    Staff staff = new AcademicStaff(); // OK

But the following code is not valid because staff is declared as a Staff type and therefore its value may or may not be of type AcademicStaff, which is the type expected by variable academicStaff.

    Staff staff;
    ...
    AcademicStaff academicStaff = staff; // Not OK

Suppose the Payroll class depends on the adjustMySalary(int percent) method of the Staff class. Furthermore, the Staff class states that the adjustMySalary method will work for all positive percent values. Both Admin and Academic classes override the adjustMySalary method.

Now consider the following:

- The Admin#adjustMySalary method works for both negative and positive percent values.

- The Academic#adjustMySalary method works for percent values 1..100 only.

In the above scenario,

- The Admin class follows LSP because it fulfills Payroll's expectation of Staff objects (i.e. it works for all positive values). Substituting Admin objects for Staff objects will not break the Payroll class functionality.

- The Academic class violates LSP because it will not work for percent values over 100 as expected by the Payroll class. Substituting Academic objects for Staff objects can potentially break the Payroll class functionality.
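The scenario above can be sketched in Java as follows. The details are assumptions made for illustration only: the `salary` field and its starting value, the use of IllegalArgumentException to signal an unsupported percent value, and the `giveBigRaise` method are all hypothetical.

```java
class Staff {
    protected double salary = 1000.0; // hypothetical starting salary

    /** Contract: works for all positive percent values. */
    void adjustMySalary(int percent) {
        if (percent <= 0) {
            throw new IllegalArgumentException("percent must be positive");
        }
        salary += salary * percent / 100.0;
    }

    double getSalary() {
        return salary;
    }
}

class Admin extends Staff {
    /** Accepts negative values too: less restrictive than Staff, so LSP holds. */
    @Override
    void adjustMySalary(int percent) {
        salary += salary * percent / 100.0;
    }
}

class Academic extends Staff {
    /** Accepts 1..100 only: more restrictive than Staff, so LSP is violated. */
    @Override
    void adjustMySalary(int percent) {
        if (percent < 1 || percent > 100) {
            throw new IllegalArgumentException("percent must be in 1..100");
        }
        salary += salary * percent / 100.0;
    }
}

class Payroll {
    /** Relies only on the contract stated by Staff (any positive percent). */
    static void giveBigRaise(Staff staff) {
        staff.adjustMySalary(150);
    }
}
```

Calling `Payroll.giveBigRaise` with an Admin object works, while calling it with an Academic object throws an exception, even though both are Staff objects as far as the compiler is concerned.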

The Rectangle#resize() can take any integers for height and width. This contract is violated by the subclass Square#resize() because it does not accept a height that is different from the width.

    class Rectangle {
        ...
        /** Sets the size to the given height and width. */
        void resize(int height, int width) {
            ...
        }
    }

    class Square extends Rectangle {
        @Override
        void resize(int height, int width) {
            if (height != width) {
                // error
            }
        }
    }

Now consider the following method that is written to work with the Rectangle class.

    void makeSameSize(Rectangle original, Rectangle toResize) {
        toResize.resize(original.getHeight(), original.getWidth());
    }

This code will fail if it is called as makeSameSize(new Rectangle(12, 8), new Square(4, 4)). That is, the Square class is not substitutable for the Rectangle class.

If a subclass imposes more restrictive conditions than its parent class, it violates Liskov Substitution Principle.

True.

Explanation: If the subclass is more restrictive than the parent class, code that worked with the parent class may not work with the child class. Hence, the substitutability does not exist and LSP has been violated.

Interface Segregation Principle (ISP): No client should be forced to depend on methods it does not use.

The Payroll class should not depend on the AdminStaff class because it does not use the arrangeMeeting() method. Instead, it should depend on the SalariedStaff interface.

    public class Payroll {
        //...
        private void adjustSalaries(AdminStaff adminStaff) { // violates ISP
            //...
        }
    }

    public class Payroll {
        //...
        private void adjustSalaries(SalariedStaff staff) { // does not violate ISP
            //...
        }
    }
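For completeness, here is one way the SalariedStaff interface and the AdminStaff class could fit together. This is a sketch under assumptions: the `getSalary()` and `setSalary()` methods and the `percent` parameter are hypothetical additions, as the original only names the classes and the arrangeMeeting() method.

```java
/** Narrow interface exposing only what Payroll needs. */
interface SalariedStaff {
    double getSalary();              // hypothetical method
    void setSalary(double salary);   // hypothetical method
}

class AdminStaff implements SalariedStaff {
    private double salary;

    AdminStaff(double salary) {
        this.salary = salary;
    }

    @Override
    public double getSalary() {
        return salary;
    }

    @Override
    public void setSalary(double salary) {
        this.salary = salary;
    }

    /** Not part of SalariedStaff, so Payroll never sees it. */
    void arrangeMeeting() {
        // ...
    }
}

class Payroll {
    /** Depends on SalariedStaff only; unaffected by changes to arrangeMeeting(). */
    void adjustSalaries(SalariedStaff staff, int percent) {
        staff.setSalary(staff.getSalary() * (100 + percent) / 100.0);
    }
}
```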

The Dependency Inversion Principle states that,

- High-level modules should not depend on low-level modules. Both should depend on abstractions.

- Abstractions should not depend on details. Details should depend on abstractions.

Can explain Brooks' law

Brooks' Law: Adding people to a late project will make it later. -- Fred Brooks (author of The Mythical Man-Month)

Explanation: The additional communication overhead will outweigh the benefit of adding extra manpower, especially if done near to a deadline.

Does Brooks' law apply to a school project? Justify.

Yes. Adding a new student to a project team can slow the project down for a short period, because the new member needs time to learn the project and existing members have to spend time helping the new member get up to speed. If the project is already behind schedule and near a deadline, this could delay the delivery even further.

Which one of these (all attributed to Fred Brooks, the author of the famous SE book The Mythical Man-Month) is called Brooks' law?

- a. All programmers are optimists.

- b. Good judgement comes from experience, and experience comes from bad judgement.

- c. The bearing of a child takes nine months, no matter how many women are assigned.

- d. Adding more manpower to an already late project makes it even later.

(d)

[W9.3] Integration Approaches

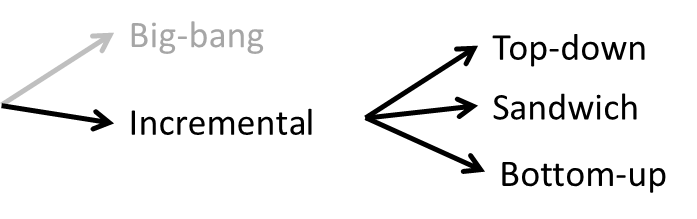

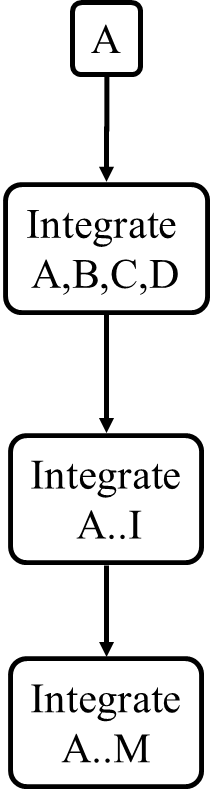

Can explain how integration approaches vary based on timing and frequency

In terms of timing and frequency, there are two general approaches to integration: late and one-time, early and frequent.

Late and one-time: wait till all components are completed and integrate all finished components near the end of the project.

This approach is not recommended because integration often causes many component incompatibilities (due to previous miscommunications and misunderstandings) to surface which can lead to delivery delays i.e. Late integration → incompatibilities found → major rework required → cannot meet the delivery date.

Early and frequent: integrate early and evolve each part in parallel, in small steps, re-integrating frequently.

Can explain how integration approaches vary based on amount merged at a time

Big-bang integration: integrate all components at the same time.

Big-bang is not recommended because it will uncover too many problems at the same time which could make debugging and bug-fixing more complex than when problems are uncovered incrementally.

Incremental integration: integrate a few components at a time. This approach is better than big-bang integration because it surfaces integration problems in a more manageable way.

Give two arguments in support and two arguments against the following statement.

Because there is no external client, it is OK to use big bang integration for a school project.

Arguments for:

- The project is relatively simple; even big-bang can succeed.

- Project duration is short; there is not enough time to integrate in steps.

- The system is non-critical, non-production (demo only); the cost of integration issues is relatively small.

Arguments against:

- Inexperienced developers; big-bang more likely to fail

- Too many problems may be discovered too late. Submission deadline (fixed) can be missed.

- Team members have not worked together before; increases the probability of integration problems.

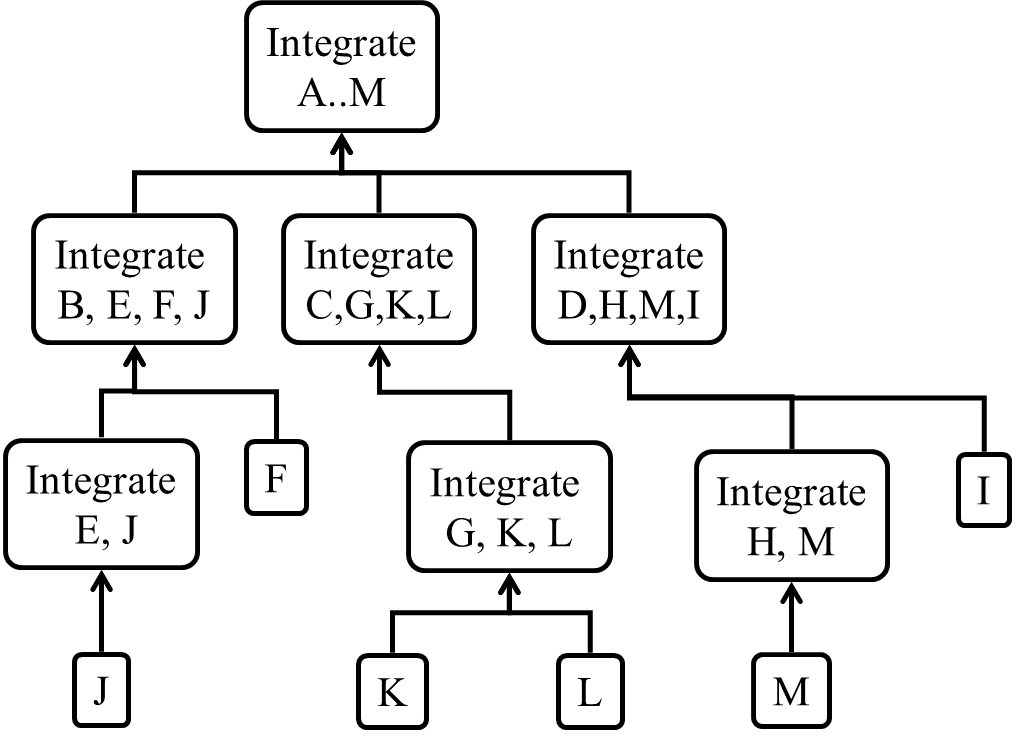

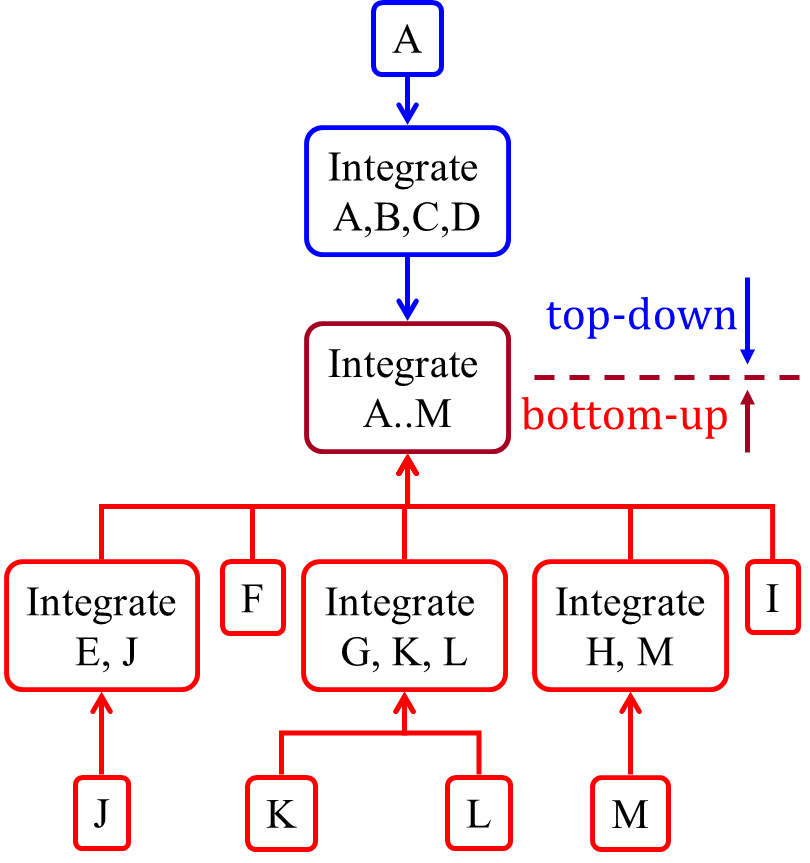

Can explain how integration approaches vary based on the order of integration

Based on the order in which components are integrated, incremental integration can be done in three ways.

Top-down integration: higher-level components are integrated before bringing in the lower-level components. One advantage of this approach is that higher-level problems can be discovered early. One disadvantage is that this requires the use of stubs in place of the lower-level components that are not yet integrated.

Stub: A stub has the same interface as the component it replaces, but its implementation is so simple that it is unlikely to have any bugs. It mimics the responses of the component, but only for a limited set of predetermined inputs. That is, it does not know how to respond to any other inputs. Typically, these mimicked responses are hard-coded in the stub rather than computed or retrieved from elsewhere, e.g. from a database.

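Bottom-up integration: the reverse of top-down integration. Note that when integrating lower-level components, drivers may be needed to exercise them because the higher-level components that would normally call them are not yet integrated.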

Sandwich integration: a mix of the top-down and the bottom-up approaches. The idea is to do both top-down and bottom-up so as to 'meet' in the middle.

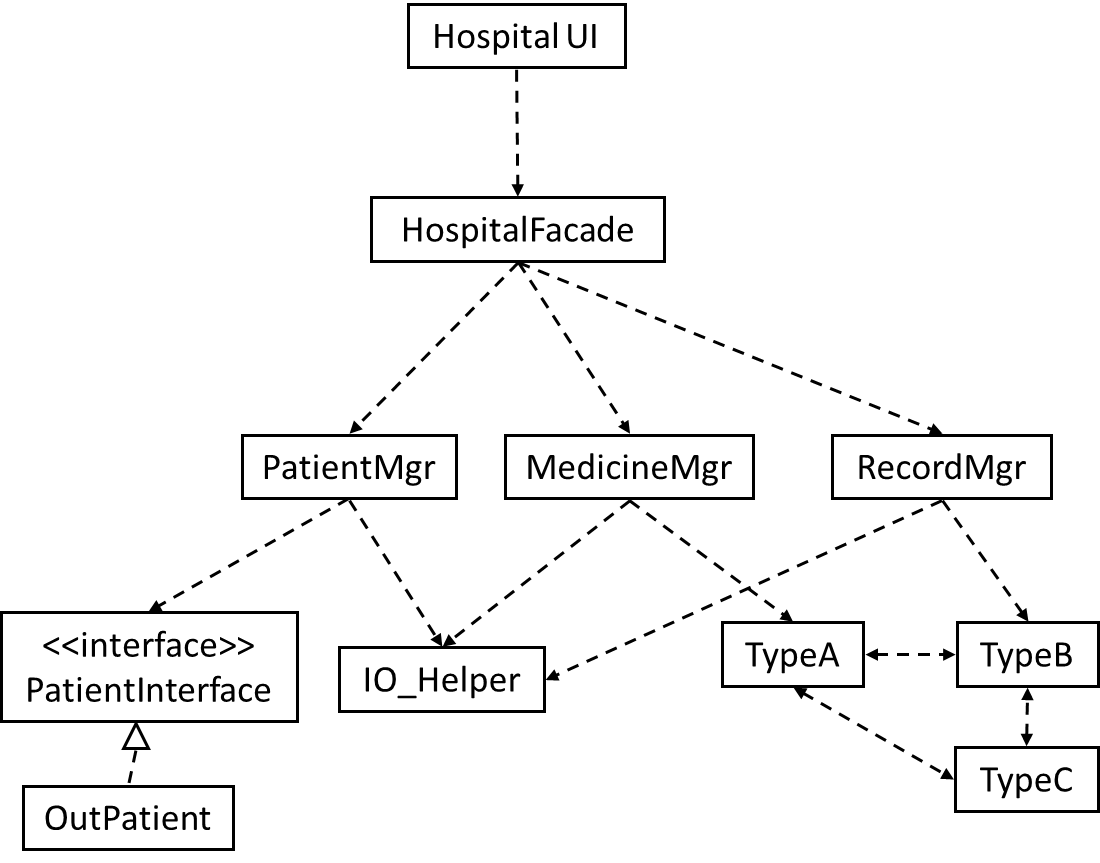

Suggest an integration strategy for the system represented by following diagram. You need not follow a strict top-down, bottom-up, sandwich, or big bang approach. Dashed arrows represent dependencies between classes.

Also take into account the following facts in your test strategy.

- HospitalUI will be developed early, so as to get customer feedback early.

- HospitalFacade shields the UI from complexities of the application layer. It simply redirects the method calls received to the appropriate classes below.

- IO_Helper is to be reused from an earlier project, with minor modifications.

- Development of the OutPatient component has been outsourced, and the delivery is not expected until the 2nd half of the project.

There can be many acceptable answers to this question. But any good strategy should consider at least some of the below.

- Because HospitalUI will be developed early, it's OK to integrate it early, using stubs, rather than wait for the rest of the system to finish (i.e. a top-down integration is suitable for HospitalUI).

- Because HospitalFacade is unlikely to have a lot of business logic, it may not be worth writing stubs to test it (i.e. a bottom-up integration is better for HospitalFacade).

- Because IO_Helper is to be reused from an earlier project, we can finish it early. This is especially suitable since there are many classes that use it. Therefore, IO_Helper can be integrated with the dependent classes in a bottom-up fashion.

- Because the OutPatient class may be delayed, we may have to integrate PatientMgr using a stub.

- TypeA, TypeB, and TypeC seem to be tightly coupled. It may be a good idea to test them together.

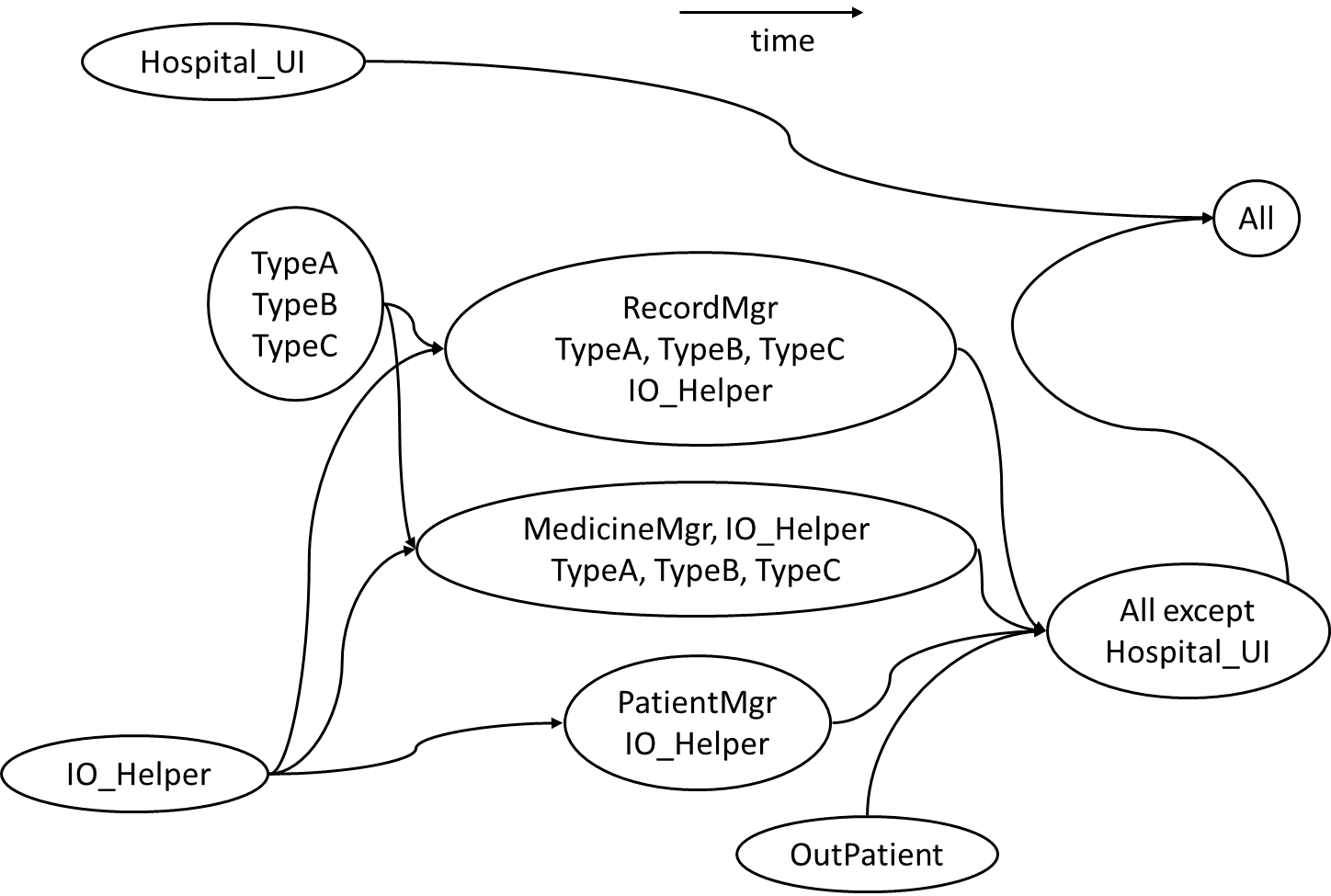

Given below is one possible integration test strategy. Relative positioning also indicates a rough timeline.

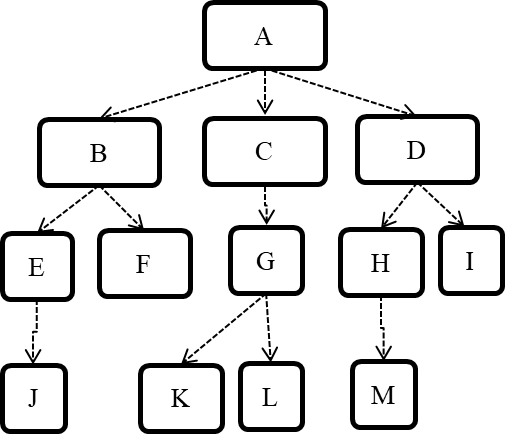

Consider the architecture given below. Describe the order in which components will be integrated with one another if the following integration strategies were adopted.

a) top-down b) bottom-up c) sandwich

Note that dashed arrows show dependencies (e.g. A depends on B, C, and D, and is therefore at a higher level than B, C, and D).

a) Diagram:

b) Diagram:

c) Diagram:

[W9.4] Types of Testing

Unit Testing

Can explain unit testing

Unit testing : testing individual units (methods, classes, subsystems, ...) to ensure each piece works correctly.

In OOP code, it is common to write one or more unit tests for each public method of a class.

Here are the code skeletons for a Foo class containing two methods and a FooTest class that contains unit tests for those two methods.

    class Foo {
        String read() {
            //...
        }

        void write(String input) {
            //...
        }
    }

    class FooTest {
        @Test
        void read() {
            // a unit test for the Foo#read() method
        }

        @Test
        void write_emptyInput_exceptionThrown() {
            // a unit test for the Foo#write(String) method
        }

        @Test
        void write_normalInput_writtenCorrectly() {
            // another unit test for the Foo#write(String) method
        }
    }

The same skeletons in Python:

    import unittest

    class Foo:
        def read(self):
            pass  # ...

        def write(self, input):
            pass  # ...

    class FooTest(unittest.TestCase):
        def test_read(self):
            pass  # a unit test for the read() method

        def test_write_emptyInput_ignored(self):
            pass  # a unit test for the write(string) method

        def test_write_normalInput_writtenCorrectly(self):
            pass  # another unit test for the write(string) method

Side readings:

- [Web article] The three pillars of unit testing - A short article about what makes a good unit test.

- Learning from Apple’s #gotofail Security Bug - How unit testing (and other good coding practices) could have prevented a major security bug.

Can use stubs to isolate an SUT from its dependencies

A proper unit test requires the unit to be tested in isolation so that bugs in its dependencies cannot influence the test.

If a Logic class depends on a Storage class, unit testing the Logic class requires isolating the Logic class from the Storage class.

Stubs can isolate the SUT (software under test) from its dependencies.

Stub: A stub has the same interface as the component it replaces, but its implementation is so simple that it is unlikely to have any bugs. It mimics the responses of the component, but only for a limited set of predetermined inputs. That is, it does not know how to respond to any other inputs. Typically, these mimicked responses are hard-coded in the stub rather than computed or retrieved from elsewhere, e.g. from a database.

Consider the code below:

    class Logic {
        Storage s;

        Logic(Storage s) {
            this.s = s;
        }

        String getName(int index) {
            return "Name: " + s.getName(index);
        }
    }

    interface Storage {
        String getName(int index);
    }

    class DatabaseStorage implements Storage {
        @Override
        public String getName(int index) {
            return readValueFromDatabase(index);
        }

        private String readValueFromDatabase(int index) {
            // retrieve name from the database
        }
    }

Normally, you would use the Logic class as follows (note how the Logic object depends on a DatabaseStorage object to perform the getName() operation):

    Logic logic = new Logic(new DatabaseStorage());
    String name = logic.getName(23);

You can test it like this:

    @Test
    void getName() {
        Logic logic = new Logic(new DatabaseStorage());
        assertEquals("Name: John", logic.getName(5));
    }

However, the Logic object being tested makes use of a DatabaseStorage object, which means a bug in the DatabaseStorage class can affect the test. Therefore, this test is not testing Logic in isolation from its dependencies and hence it is not a pure unit test.

Here is a stub class you can use in place of DatabaseStorage:

    class StorageStub implements Storage {
        @Override
        public String getName(int index) {
            if (index == 5) {
                return "Adam";
            } else {
                throw new UnsupportedOperationException();
            }
        }
    }

Note how the stub has the same interface as the real dependency, is so simple that it is unlikely to contain bugs, and is pre-configured to respond with a hard-coded response, presumably, the correct response DatabaseStorage is expected to return for the given test input.

Here is how you can use the stub to write a unit test. This test is not affected by any bugs in the DatabaseStorage class and hence is a pure unit test.

    @Test
    void getName() {
        Logic logic = new Logic(new StorageStub());
        assertEquals("Name: Adam", logic.getName(5));
    }

In addition to Stubs, there are other types of replacements you can use during testing, e.g. Mocks, Fakes, Dummies, Spies.

- Mocks Aren't Stubs by Martin Fowler -- An in-depth article about how Stubs differ from other types of test helpers.

Stubs help us to test a component in isolation from its dependencies.

True

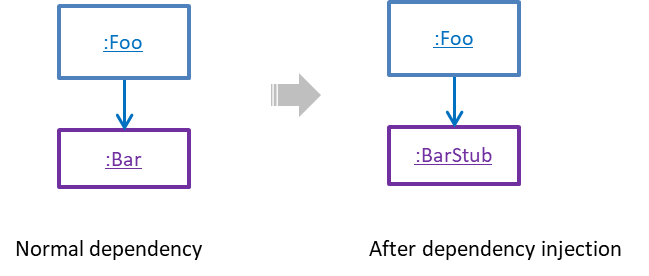

Can explain dependency injection

Dependency injection is the process of 'injecting' objects to replace current dependencies with a different object. This is often used to inject stubs so that a component can be tested in isolation from its dependencies.

A Foo object normally depends on a Bar object, but we can inject a BarStub object so that the Foo object no longer depends on a Bar object. Now we can test the Foo object in isolation from the Bar object.

Can use dependency injection

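Polymorphism can be used to implement dependency injection, as can be seen in the stub example given under unit testing above: because StorageStub implements the Storage interface, it can be injected into a Logic object in place of a DatabaseStorage object.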

Here is another example of using polymorphism to implement dependency injection:

Suppose we want to unit test the Payroll#totalSalary() given below. The method depends on the SalaryManager object to calculate the return value. Note how the setSalaryManager(SalaryManager) can be used to inject a SalaryManager object to replace the current SalaryManager object.

    class Payroll {
        private SalaryManager manager = new SalaryManager();
        private String[] employees;

        void setEmployees(String[] employees) {
            this.employees = employees;
        }

        void setSalaryManager(SalaryManager sm) {
            this.manager = sm;
        }

        double totalSalary() {
            double total = 0;
            for (int i = 0; i < employees.length; i++) {
                total += manager.getSalaryForEmployee(employees[i]);
            }
            return total;
        }
    }

    class SalaryManager {
        double getSalaryForEmployee(String empID) {
            // code to access employee's salary history
            // code to calculate total salary paid and return it
        }
    }

During testing, you can inject a SalaryManagerStub object to replace the SalaryManager object.

    class PayrollTest {
        public static void main(String[] args) {
            // test setup
            Payroll p = new Payroll();
            p.setSalaryManager(new SalaryManagerStub()); // dependency injection

            // test case 1
            p.setEmployees(new String[]{"E001", "E002"});
            assertEquals(2500.0, p.totalSalary());

            // test case 2
            p.setEmployees(new String[]{"E001"});
            assertEquals(1000.0, p.totalSalary());

            // more tests ...
        }
    }

    class SalaryManagerStub extends SalaryManager {
        /** Returns hard-coded values used for testing. */
        @Override
        double getSalaryForEmployee(String empID) {
            if (empID.equals("E001")) {
                return 1000.0;
            } else if (empID.equals("E002")) {
                return 1500.0;
            } else {
                throw new Error("unknown id");
            }
        }
    }

Choose the correct statements about dependency injection.

- a. It is a technique for increasing dependencies

- b. It is useful for unit testing

- c. It can be done using polymorphism

- d. It can be used to substitute a component with a stub

(b)(c)(d)

Explanation: It is a technique we can use to substitute an existing dependency with another, not increase dependencies. It is useful when you want to test a component in isolation but the SUT depends on other components. Using dependency injection, we can substitute those other components with test-friendly stubs. This is often done using polymorphism.

Integration Testing

Can explain integration testing

Integration testing : testing whether different parts of the software work together (i.e. integrates) as expected. Integration tests aim to discover bugs in the 'glue code' related to how components interact with each other. These bugs are often the result of misunderstanding of what the parts are supposed to do vs what the parts are actually doing.

Suppose a class Car uses classes Engine and Wheel. If the Car class assumed a Wheel can support 200 mph speed but the actual Wheel can only support 150 mph, it is the integration test that is supposed to uncover this discrepancy.
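The Car and Wheel discrepancy can be sketched as follows. This is an illustration under assumptions: the `maxSpeedMph()` and `canReachTopSpeed()` methods and the class names `RealWheel` and `WheelStub` are hypothetical, and Engine is left out to keep the sketch short.

```java
interface Wheel {
    int maxSpeedMph();
}

/** The actual component: supports 150 mph only. */
class RealWheel implements Wheel {
    @Override
    public int maxSpeedMph() {
        return 150;
    }
}

/** Stub written by the Car developer; it encodes the (wrong) 200 mph assumption. */
class WheelStub implements Wheel {
    @Override
    public int maxSpeedMph() {
        return 200;
    }
}

class Car {
    static final int TOP_SPEED_MPH = 200;
    private final Wheel wheel;

    Car(Wheel wheel) {
        this.wheel = wheel;
    }

    /** True if the wheel can cope with the car's top speed. */
    boolean canReachTopSpeed() {
        return wheel.maxSpeedMph() >= TOP_SPEED_MPH;
    }
}
```

A unit test of Car that uses WheelStub sees `canReachTopSpeed()` return true, so the mismatch stays hidden. Only a test that combines Car with RealWheel, i.e. an integration test, gets false and exposes the discrepancy.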

Can use integration testing

Integration testing is not simply a matter of repeating the unit test cases using the actual dependencies (instead of the stubs used in unit testing). Instead, integration tests are additional test cases that focus on the interactions between the parts.

Suppose a class Car uses classes Engine and Wheel. Here is how you would go about doing pure integration tests:

a) First, unit test Engine and Wheel.

b) Next, unit test Car in isolation of Engine and Wheel, using stubs for Engine and Wheel.

c) After that, do an integration test for Car using it together with the Engine and Wheel classes to ensure the Car integrates properly with the Engine and the Wheel.

In practice, developers often use a hybrid of unit+integration tests to minimize the need for stubs.

Here's how a hybrid unit+integration approach could be applied to the same example used above:

(a) First, unit test Engine and Wheel.

(b) Skip the step of unit testing Car in isolation from Engine and Wheel; no stubs are written for Engine and Wheel.

(c) After that, do an integration test for Car using it together with the Engine and Wheel classes to ensure the Car integrates properly with the Engine and the Wheel. This step should include test cases that are meant to test the unit Car (i.e. test cases used in step (b) of the example above) as well as test cases that are meant to test the integration of Car with Wheel and Engine (i.e. pure integration test cases used in step (c) of the example above).

💡 Note that you no longer need stubs for Engine and Wheel. The downside is that Car is never tested in isolation of its dependencies. Given that its dependencies are already unit tested, the risk of bugs in Engine and Wheel affecting the testing of Car can be considered minimal.

System Testing

Can explain system testing

System testing: take the whole system and test it against the system specification.

System testing is typically done by a testing team (also called a QA team).

System test cases are based on the specified external behavior of the system. Sometimes, system tests go beyond the bounds defined in the specification. This is useful when testing that the system fails 'gracefully' when pushed beyond its limits.

Suppose the SUT is a browser supposedly capable of handling web pages containing up to 5000 characters. Given below is a test case to test if the SUT fails gracefully if pushed beyond its limits.

Test case: load a web page that is too big

* Input: load a web page containing more than 5000 characters.

* Expected behavior: abort the loading of the page and show a meaningful error message.

This test case would fail if the browser attempted to load the large file anyway and crashed.
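If such a test case were automated, it might look roughly like the sketch below. Everything here is hypothetical: `Browser` is a tiny stand-in for the real SUT (which would normally be driven through its actual interface), and the status strings and method names are assumptions made for illustration.

```java
/** Hypothetical stand-in for the browser SUT. */
class Browser {
    static final int MAX_PAGE_LENGTH = 5000;

    /** Returns the status message the user would see after attempting to load the page. */
    String load(String pageContent) {
        if (pageContent.length() > MAX_PAGE_LENGTH) {
            // Fail gracefully: abort the load and report the problem.
            return "Error: page exceeds " + MAX_PAGE_LENGTH + " characters";
        }
        return "Loaded " + pageContent.length() + " characters";
    }
}

class BrowserSystemTest {
    /** Builds a page of the given length. */
    static String pageOfLength(int length) {
        StringBuilder page = new StringBuilder();
        for (int i = 0; i < length; i++) {
            page.append('x');
        }
        return page.toString();
    }

    /** The test case from the text: a page that is too big must be rejected cleanly. */
    static boolean failsGracefullyOnOversizedPage(Browser browser) {
        String status = browser.load(pageOfLength(Browser.MAX_PAGE_LENGTH + 1));
        return status.startsWith("Error:");
    }
}
```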

System testing includes testing against non-functional requirements too. Here are some examples.

- Performance testing – to ensure the system responds quickly.

- Load testing (also called stress testing or scalability testing) – to ensure the system can work under heavy load.

- Security testing – to test how secure the system is.

- Compatibility testing, interoperability testing – to check whether the system can work with other systems.

- Usability testing – to test how easy it is to use the system.

- Portability testing – to test whether the system works on different platforms.

Can explain automated GUI testing

If a software product has a GUI component, all product-level testing (i.e. the types of testing mentioned above) needs to be done using the GUI. However, testing the GUI is much harder than testing the CLI (command line interface) or API, for the following reasons:

- Most GUIs can support a large number of different operations, many of which can be performed in any arbitrary order.

- GUI operations are more difficult to automate than API testing. Reliably automating GUI operations and automatically verifying whether the GUI behaves as expected is harder than calling an operation and comparing its return value with an expected value. Therefore, automated regression testing of GUIs is rather difficult.

- The appearance of a GUI (and sometimes even its behavior) can differ across platforms and even environments. For example, a GUI can behave differently based on whether it is minimized or maximized, in focus or out of focus, and on a high-resolution display or a low-resolution display.

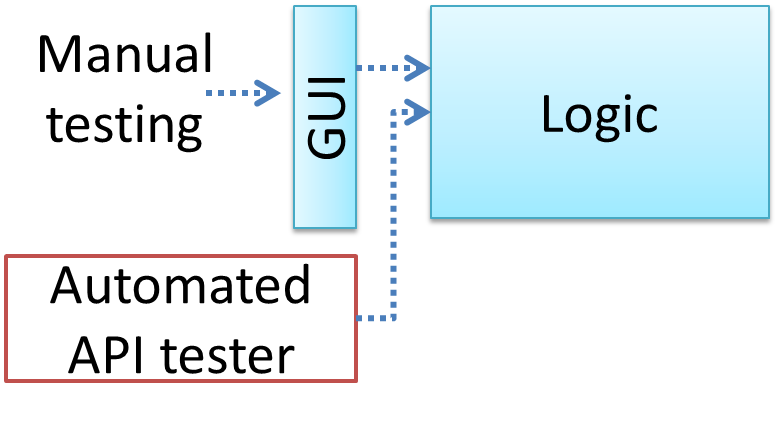

One approach to overcome the challenges of testing GUIs is to minimize logic aspects in the GUI. Then, bypass the GUI to test the rest of the system using automated API testing. While this still requires the GUI to be tested manually, the number of such manual test cases can be reduced as most of the system has been tested using automated API testing.
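The idea of keeping logic out of the GUI can be sketched as follows. All names here (`LoginLogic`, `LoginWindow`, the validation rules) are hypothetical and chosen only for illustration; the GUI class is simulated with a plain object rather than a real GUI toolkit.

```python
class LoginLogic:
    """Hypothetical non-GUI class that holds all the decision-making logic."""

    MIN_PASSWORD_LENGTH = 8  # assumed rule, for illustration only

    def validate(self, username: str, password: str) -> str:
        if not username.strip():
            return "Username cannot be empty"
        if len(password) < self.MIN_PASSWORD_LENGTH:
            return "Password is too short"
        return "OK"


class LoginWindow:
    """Hypothetical thin GUI layer: forwards input and displays the result."""

    def __init__(self, logic: LoginLogic):
        self.logic = logic
        self.status_text = ""

    def on_submit_clicked(self, username: str, password: str) -> None:
        # No logic here; the GUI only delegates and shows the outcome.
        self.status_text = self.logic.validate(username, password)


# Automated API tests bypass the GUI and exercise the logic directly.
logic = LoginLogic()
api_test_results = [
    logic.validate("", "long-enough-password") == "Username cannot be empty",
    logic.validate("alice", "short") == "Password is too short",
    logic.validate("alice", "long-enough-password") == "OK",
]
```

With this split, the remaining manual GUI tests only need to confirm that `LoginWindow` passes input through and displays the returned message.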

There are testing tools that can automate GUI testing.

True or false: GUI testing is usually easier than API testing because it doesn’t require any extra coding.

False

Acceptance Testing

Can explain acceptance testing

Acceptance testing (aka User Acceptance Testing (UAT)): test the delivered system to ensure it meets the user requirements.

Acceptance tests give an assurance to the customer that the system does what it is intended to do. Acceptance test cases are often defined at the beginning of the project, usually based on the use case specification. Successful completion of UAT is often a prerequisite to the project sign-off.

Can explain the differences between system testing and acceptance testing

Acceptance testing comes after system testing. Similar to system testing, acceptance testing involves testing the whole system.

Some differences between system testing and acceptance testing:

| System Testing | Acceptance Testing |
|---|---|
| Done against the system specification | Done against the requirements specification |
| Done by testers of the project team | Done by a team that represents the customer |
| Done on the development environment or a test bed | Done on the deployment site or on a close simulation of the deployment site |
| Both negative and positive test cases | More focus on positive test cases |

Note: negative test cases: cases where the SUT is not expected to work normally e.g. incorrect inputs; positive test cases: cases where the SUT is expected to work normally
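To make the distinction between positive and negative test cases concrete, here is a small sketch. The function `parse_age` and its valid range of 0 to 150 are assumptions made up for this illustration.

```python
def parse_age(text: str) -> int:
    """Hypothetical SUT: convert user input to an age between 0 and 150."""
    age = int(text)  # raises ValueError for non-numeric input
    if not 0 <= age <= 150:
        raise ValueError(f"age out of range: {age}")
    return age


def rejects(text: str) -> bool:
    """Return True if the SUT rejects the input with a ValueError."""
    try:
        parse_age(text)
    except ValueError:
        return True
    return False


# Positive test cases: the SUT is expected to work normally.
positive_results = [parse_age("25") == 25, parse_age("0") == 0]

# Negative test cases: the SUT is expected to reject the input.
negative_results = [rejects("abc"), rejects("-5"), rejects("999")]
```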

Requirement Specification vs System Specification

The requirement specification need not be the same as the system specification. Some example differences:

| Requirements Specification | System Specification |
|---|---|
| limited to how the system behaves in normal working conditions | can also include details on how it will fail gracefully when pushed beyond limits, how to recover, etc. |
| written in terms of problems that need to be solved (e.g. provide a method to locate an email quickly) | written in terms of how the system solves those problems (e.g. explain the email search feature) |
| specifies the interface available for intended end-users | could contain additional APIs not available for end-users (for the use of developers/testers) |

However, in many cases one document serves as both a requirement specification and a system specification.

Passing system tests does not necessarily mean passing acceptance testing. Some examples:

- The system might work on the testbed environments but might not work the same way in the deployment environment, due to subtle differences between the two environments.

- The system might conform to the system specification but could fail to solve the problem it was supposed to solve for the user, due to flaws in the system design.

Choose the correct statements about system testing and acceptance testing.

- a. Both system testing and acceptance testing typically involve the whole system.

- b. System testing is typically more extensive than acceptance testing.

- c. System testing can include testing for non-functional qualities.

- d. Acceptance testing typically has more user involvement than system testing.

- e. In smaller projects, the developers may do system testing as well, in addition to developer testing.

- f. If system testing is adequately done, we need not do acceptance testing.

(a)(b)(c)(d)(e)

Explanation:

(b) is correct because system testing can aim to cover all specified behaviors and can even go beyond the system specification. Therefore, system testing is typically more extensive than acceptance testing.

(f) is incorrect because it is possible for a system to pass system tests but fail acceptance tests.

Alpha/Beta Testing

Can explain alpha and beta testing

Alpha testing is performed by the users, under controlled conditions set by the software development team.

Beta testing is performed by a selected subset of target users of the system in their natural work setting.

An open beta release is the release of not-yet-production-quality-but-almost-there software to the general population. For example, Google’s Gmail was in 'beta' for many years before the label was finally removed.

[W9.5] Writing Developer Documents

Type of Developer Docs

Can explain the two types of developer docs

Developer-to-developer documentation can be in one of two forms:

- Documentation for developer-as-user: Software components are written by developers and reused by other developers, which means there is a need to document how such components are to be used. Such documentation can take several forms:

  - API documentation: APIs expose functionality in small-sized, independent and easy-to-use chunks, each of which can be documented systematically.

  - Tutorial-style instructional documentation: In addition to explaining functions/methods independently, some higher-level explanations of how to use an API can be useful.

  - Example of API documentation: String API.

  - Example of tutorial-style documentation: Java Internationalization Tutorial.

  - Example of API documentation: string API.

  - Example of tutorial-style documentation: How to use Regular Expressions in Python.

- Documentation for developer-as-maintainer: There is a need to document how a system or a component is designed, implemented and tested so that other developers can maintain and evolve the code. Writing documentation of this type is harder because of the need to explain complex internal details. However, given that readers of this type of documentation usually have access to the source code itself, only some information needs to be included in the documentation, as code (and code comments) can also serve as a complementary source of information.

  - An example: se-edu/addressbook-level4 Developer Guide.
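As an illustration of developer-as-user documentation, API documentation is often written as structured comments next to the code it describes. The sketch below uses a Python docstring; the function `count_words` is a made-up example, not taken from any of the APIs mentioned above.

```python
def count_words(text: str) -> int:
    """Return the number of whitespace-separated words in ``text``.

    Args:
        text: The string to examine. May be empty.

    Returns:
        The word count; 0 for an empty or all-whitespace string.

    Example:
        >>> count_words("to be or not to be")
        6
    """
    return len(text.split())
```

The comment documents one small, independent operation: what it takes, what it returns, and a short usage example. It says nothing about how the function is implemented, which is what a developer-as-user needs.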

Choose correct statements about API documentation.

- a. They are useful for both developers who use the API and developers who maintain the API implementation.

- b. There are tools that can generate API documents from code comments.

- d. API documentation may contain code examples.

All

Guideline: Aim for Comprehensibility

Can explain the need for comprehensibility in documents

Technical documents exist to help others understand technical details. Therefore, it is not enough for the documentation to be accurate and comprehensive; it should be comprehensible too.

Can write reasonably comprehensible developer documents

Here are some tips on writing effective documentation.

- Use plenty of diagrams: It is not enough to explain something in words; complement it with visual illustrations (e.g. a UML diagram).

- Use plenty of examples: When explaining algorithms, show a running example to illustrate each step of the algorithm, in parallel to worded explanations.

- Use simple and direct explanations: Convoluted explanations and fancy words will annoy readers. Avoid long sentences.

- Get rid of statements that do not add value: For example, 'We made sure our system works perfectly' (who didn't?), 'Component X has its own responsibilities' (of course it has!).

- It is not a good idea to have separate sections for each type of artifact, such as 'use cases', 'sequence diagrams', 'activity diagrams', etc. Such a structure, coupled with the indiscriminate inclusion of diagrams without justifying their need, indicates a failure to understand the purpose of documentation. Include diagrams when they are needed to explain something. If you want to provide additional diagrams for completeness' sake, include them in the appendix as a reference.

It is recommended for developer documents,

- a. to have separate sections for each type of diagram, such as class diagrams, sequence diagrams, use case diagrams, etc.

- b. to give a high priority to comprehension too, not stop at comprehensiveness only.

(b)

Explanation:

(a) Use diagrams when they help to understand the text descriptions. Text and diagrams should be used in tandem. Having separate sections for each diagram type is a sign of generating diagrams for the sake of having them.

(b) Both are important, but lengthy, complete, accurate yet hard to understand documents are not that useful.

Guideline: Describe Top-Down

Can distinguish between top-down and bottom-up documentation

When writing project documents, a top-down breadth-first explanation is easier to understand than a bottom-up one.

Can explain the advantages of top-down documentation

The main advantage of the top-down approach is that the document is structured like an upside-down tree (root at the top), so the reader can travel down a path she is interested in until she reaches the component she wants to learn about in depth, without having to read the entire document or understand the whole system.

Can write documentation in a top-down manner

To explain a system called SystemFoo with two sub-systems, FrontEnd and BackEnd, start by describing the system at the highest level of abstraction, and progressively drill down to lower level details. An outline for such a description is given below.

[First, explain what the system is, in a black-box fashion (no internal details, only the external view).]

SystemFoo is a ....

[Next, explain the high-level architecture of SystemFoo, referring to its major components only.]

SystemFoo consists of two major components: FrontEnd and BackEnd.

The job of FrontEnd is to ... while the job of BackEnd is to ...

And this is how FrontEnd and BackEnd work together ...

[Now we can drill down to FrontEnd's details.]

FrontEnd consists of three major components: A, B, C

A's job is to ... B's job is to ... C's job is to ...

And this is how the three components work together ...

[At this point, further drill down the internal workings of each component. A reader who is not interested in knowing nitty-gritty details can skip ahead to the section on BackEnd.]

In-depth description of A

In-depth description of B

...

[At this point drill down details of the BackEnd.]

...
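The ordering used in the outline above can also be expressed as a small sketch: describe a component and name its parts before drilling into any one part. The `Component` class and the `outline` function are hypothetical helpers written for this illustration, and the component tree simply mirrors the SystemFoo example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Component:
    name: str
    parts: List["Component"] = field(default_factory=list)


def outline(component: Component, depth: int = 0) -> List[str]:
    """List section headings top-down: overview first, details later."""
    indent = "  " * depth
    lines = [f"{indent}{component.name}: overview"]
    if component.parts:
        names = ", ".join(part.name for part in component.parts)
        # Introduce all parts at this level before describing any in depth.
        lines.append(f"{indent}{component.name}: how {names} work together")
        for part in component.parts:
            lines.extend(outline(part, depth + 1))
    return lines


system_foo = Component("SystemFoo", [
    Component("FrontEnd", [Component("A"), Component("B"), Component("C")]),
    Component("BackEnd"),
])

for heading in outline(system_foo):
    print(heading)
```

Each level of indentation corresponds to one level of drill-down, so a reader can stop at any depth and still have a complete picture down to that level.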

Guideline: Minimal but Sufficient

Can explain why documentation should be minimal yet sufficient

Aim for 'just enough' developer documentation.

- Writing and maintaining developer documents is an overhead. You should try to minimize that overhead.

- If the readers are developers who will eventually read the code, the documentation should complement the code and should provide only just enough guidance to get started.

Can write minimal yet sufficient documentation

Anything that is already clear in the code need not be described in words. Instead, focus on providing higher level information that is not readily visible in the code or comments.

Refrain from duplicating chunks of text. When describing several similar algorithms/designs/APIs, etc., do not simply duplicate large chunks of text. Instead, describe the similarity in one place and emphasize only the differences in other places. It is very annoying to see pages and pages of similar text without any indication as to how they differ from each other.

Drawing Architecture Diagrams

Can draw an architecture diagram