Week 10 [Mar 25]

RepoSense

- We use a new tool called RepoSense to identify your contributions to the project.

- The tool is set up for the module and the report is available under the project-dashboard link.

- Ensure your code is RepoSense compatible. Instructions here.

- Follow the user guide for RepoSense and fix the issues, if you don't see your contributions detected.

[W10.1] Design Patterns: Basics

Introduction

Can explain design patterns

Design Pattern : An elegant reusable solution to a commonly recurring problem within a given context in software design.

In software development, there are certain problems that recur in a certain context.

Some examples of recurring design problems:

| Design Context | Recurring Problem |
|---|---|
| Assembling a system that makes use of other existing systems implemented using different technologies | What is the best architecture? |
| UI needs to be updated when the data in application backend changes | How to initiate an update to the UI when data changes without coupling the backend to the UI? |

After repeated attempts at solving such problems, better solutions are discovered and refined over time. These solutions are known as design patterns, a term popularized by the seminal book Design Patterns: Elements of Reusable Object-Oriented Software, written by the so-called "Gang of Four" (GoF): Erich Gamma, Richard Helm, Ralph Johnson, and John Vlissides.

Which one of these describes the ‘software design patterns’ concept best?

(b)

Can explain design patterns format

The common format to describe a pattern consists of the following components:

- Context: The situation or scenario where the design problem is encountered.

- Problem: The main difficulty to be resolved.

- Solution: The core of the solution. It is important to note that the solution presented only includes the most general details, which may need further refinement for a specific context.

- Anti-patterns (optional): Commonly used solutions, which are usually incorrect and/or inferior to the Design Pattern.

- Consequences (optional): Identifying the pros and cons of applying the pattern.

- Other useful information (optional): Code examples, known uses, other related patterns, etc.

When we describe a pattern, we must also specify anti-patterns.

False.

Explanation: Anti-patterns are related to patterns, but they are not a ‘must have’ component of a pattern description.

Singleton pattern

Can explain the Singleton design pattern

Context

Certain classes should have no more than one instance (e.g. the main controller class of the system). These single instances are commonly known as singletons.

Problem

A normal class can be instantiated multiple times by invoking the constructor.

Solution

Make the constructor of the singleton class private, because a public constructor will allow others to instantiate the class at will. Provide a public class-level method to access the single instance.

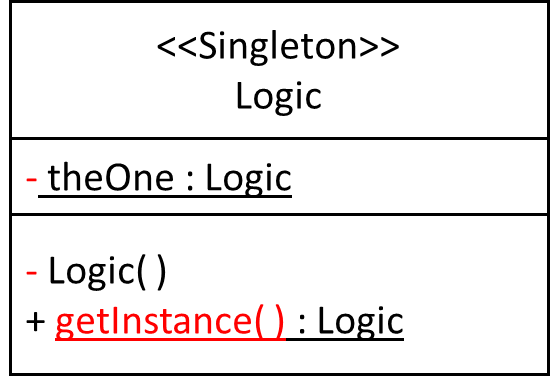

Example:

We use the Singleton pattern when

(c)

Can apply the Singleton design pattern

Here is the typical implementation of how the Singleton pattern is applied to a class:

    class Logic {
        private static Logic theOne = null;

        private Logic() {
            ...
        }

        public static Logic getInstance() {
            if (theOne == null) {
                theOne = new Logic();
            }
            return theOne;
        }
    }

Notes:

- The constructor is private, which prevents instantiation from outside the class.

- The single instance of the singleton class is maintained by a private class-level variable.

- Access to this object is provided by a public class-level operation getInstance() which instantiates a single copy of the singleton class when it is executed for the first time. Subsequent calls to this operation return the single instance of the class.

If Logic was not a Singleton class, an object is created like this:

Logic m = new Logic();

But now, the Logic object needs to be accessed like this:

Logic m = Logic.getInstance();
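To make the access pattern above concrete, here is a minimal, self-contained sketch. The callCount field, the recordCall() method, and the SingletonDemo class are hypothetical additions for illustration only; they are not part of the Logic class shown above.

```java
// Sketch of a Singleton. The call counter is a hypothetical addition used
// only to show that every caller ends up sharing the same object.
class Logic {
    private static Logic theOne = null; // the single instance, created lazily

    private int callCount = 0; // hypothetical state shared by all callers

    private Logic() {
        // private: code outside this class cannot call 'new Logic()'
    }

    public static Logic getInstance() {
        if (theOne == null) {
            theOne = new Logic(); // runs only on the first call
        }
        return theOne;
    }

    public int recordCall() {
        callCount++;
        return callCount;
    }
}

class SingletonDemo {
    public static void main(String[] args) {
        Logic a = Logic.getInstance();
        Logic b = Logic.getInstance();
        System.out.println(a == b);         // true: both names refer to one object
        a.recordCall();
        System.out.println(b.recordCall()); // 2: the state is shared
    }
}
```

Note that this lazy initialization is not thread-safe as written; if getInstance() can be called from several threads, some form of synchronization would be needed.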

Can decide when to apply Singleton design pattern

Pros:

- easy to apply

- effective in achieving its goal with minimal extra work

- provides an easy way to access the singleton object from anywhere in the code base

Cons:

- The singleton object acts like a global variable that increases coupling across the code base.

- In testing, it is difficult to replace Singleton objects with stubs (static methods cannot be overridden)

- In testing, singleton objects carry data from one test to another even when we want each test to be independent of the others.

Given there are some significant cons, it is recommended that you apply the Singleton pattern when, in addition to requiring only one instance of a class, there is a risk of creating multiple objects by mistake, and creating such multiple objects has real negative consequences.

Facade pattern

Can explain the Facade design pattern

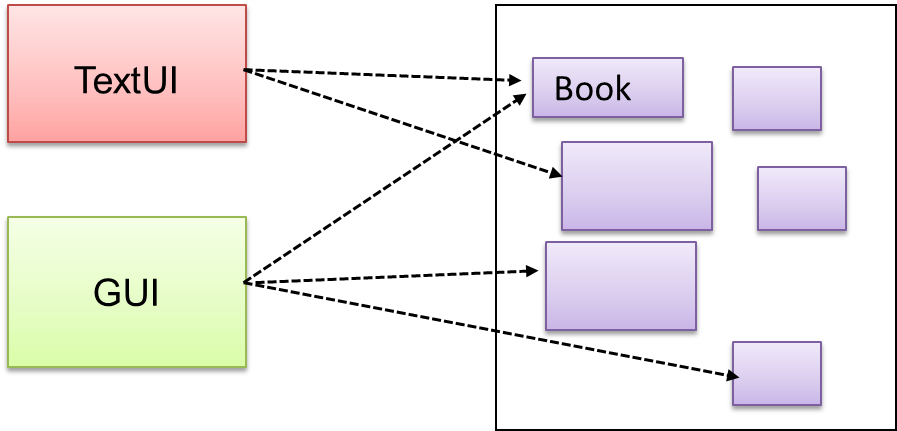

Context

Components need to access functionality deep inside other components.

The UI component of a Library system might want to access functionality of the Book class contained inside the Logic component.

Problem

Access to the component should be allowed without exposing its internal details. e.g. the UI component should access the functionality of the Logic component without knowing that it contained a Book class within it.

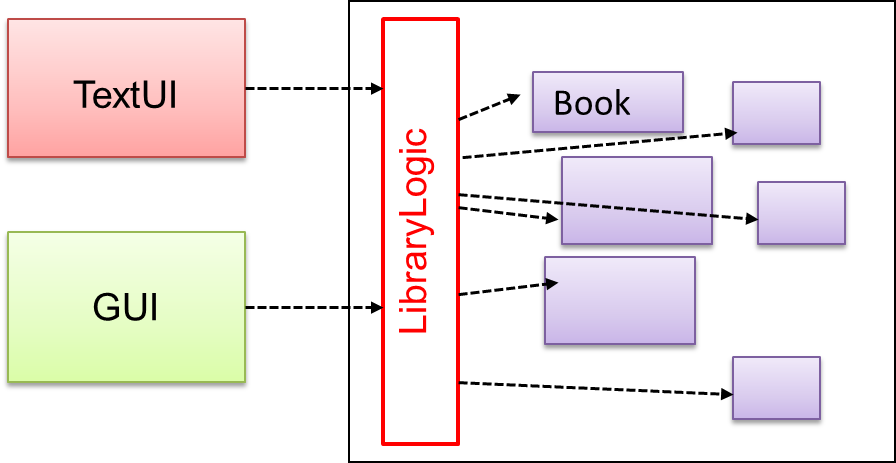

Solution

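Include a Facade class that sits between the component internals and users of the component, so that all access to the component happens through the Facade class.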

The following class diagram applies the Façade pattern to the Library System example. The LibraryLogic class is the Facade class.

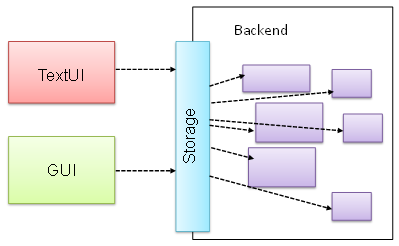
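In code, the Library example might look roughly like the sketch below. Only the class names LibraryLogic and Book come from the text; the methods addBook() and loanBook(), the ISBN-keyed map, and the FacadeDemo class are assumptions made for illustration.

```java
import java.util.HashMap;
import java.util.Map;

// Internal class of the Logic component. The UI never refers to it directly.
class Book {
    private final String title;
    private boolean onLoan = false;

    Book(String title) {
        this.title = title;
    }

    String getTitle() {
        return title;
    }

    boolean isOnLoan() {
        return onLoan;
    }

    void markOnLoan() {
        onLoan = true;
    }
}

// The Facade: the only class of the Logic component that the UI talks to.
class LibraryLogic {
    private final Map<String, Book> booksByIsbn = new HashMap<>();

    public void addBook(String isbn, String title) {
        booksByIsbn.put(isbn, new Book(title));
    }

    // Returns true if the loan succeeded. Callers never see a Book object.
    public boolean loanBook(String isbn) {
        Book book = booksByIsbn.get(isbn);
        if (book == null || book.isOnLoan()) {
            return false;
        }
        book.markOnLoan();
        return true;
    }
}

class FacadeDemo {
    public static void main(String[] args) {
        LibraryLogic logic = new LibraryLogic();
        logic.addBook("isbn-1", "Some Title");
        System.out.println(logic.loanBook("isbn-1")); // true
        System.out.println(logic.loanBook("isbn-1")); // false: already on loan
        System.out.println(logic.loanBook("isbn-2")); // false: no such book
    }
}
```

Because the UI depends only on LibraryLogic, the Book class can be renamed or restructured without changing any UI code.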

Is the design below likely to use the Facade pattern?

True.

Facade is clearly visible (Storage is the << Facade >> class).

Command pattern

Can explain the Command design pattern

Context

A system is required to execute a number of commands, each doing a different task. For example, a system might have to support Sort, List, Reset commands.

Problem

It is preferable that some part of the code executes these commands without having to know each command type. e.g., there can be a CommandQueue object that is responsible for queuing commands and executing them without knowledge of what each command does.

Solution

The essential element of this pattern is to have a general <<Command>> object that can be passed around, stored, executed, etc. without knowing the type of command (i.e. via polymorphism).

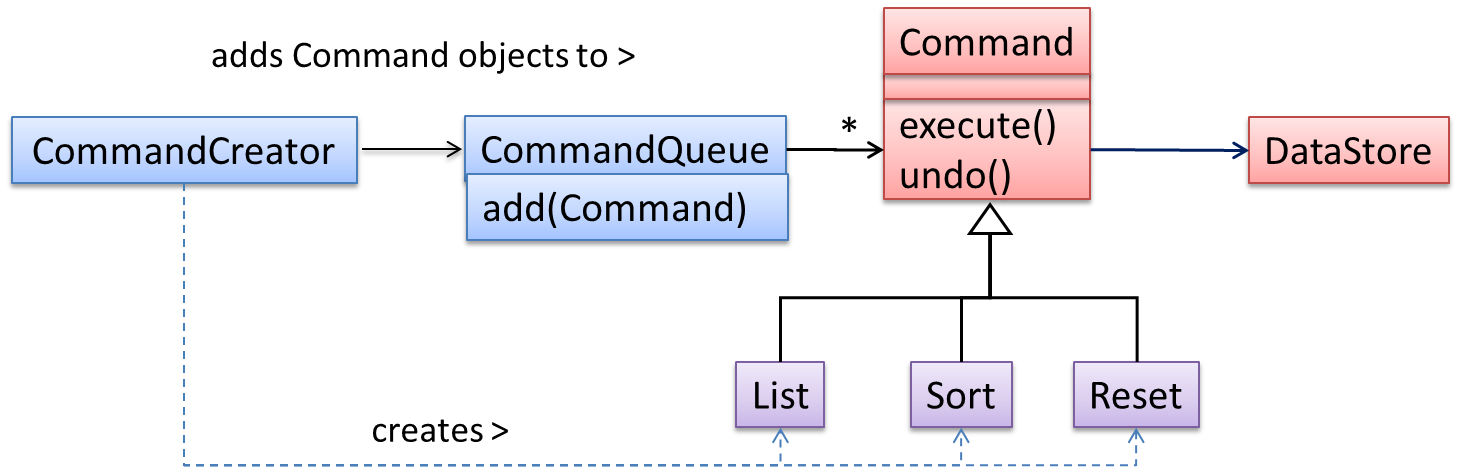

Let us examine an example application of the pattern first:

In the example solution below, the CommandCreator creates List, Sort, and Reset Command objects and adds them to the CommandQueue object. The CommandQueue object treats them all as Command objects and performs the execute/undo operation on each of them without knowledge of the specific Command type. When executed, each Command object will access the DataStore object to carry out its task. The Command class can also be an abstract class or an interface.

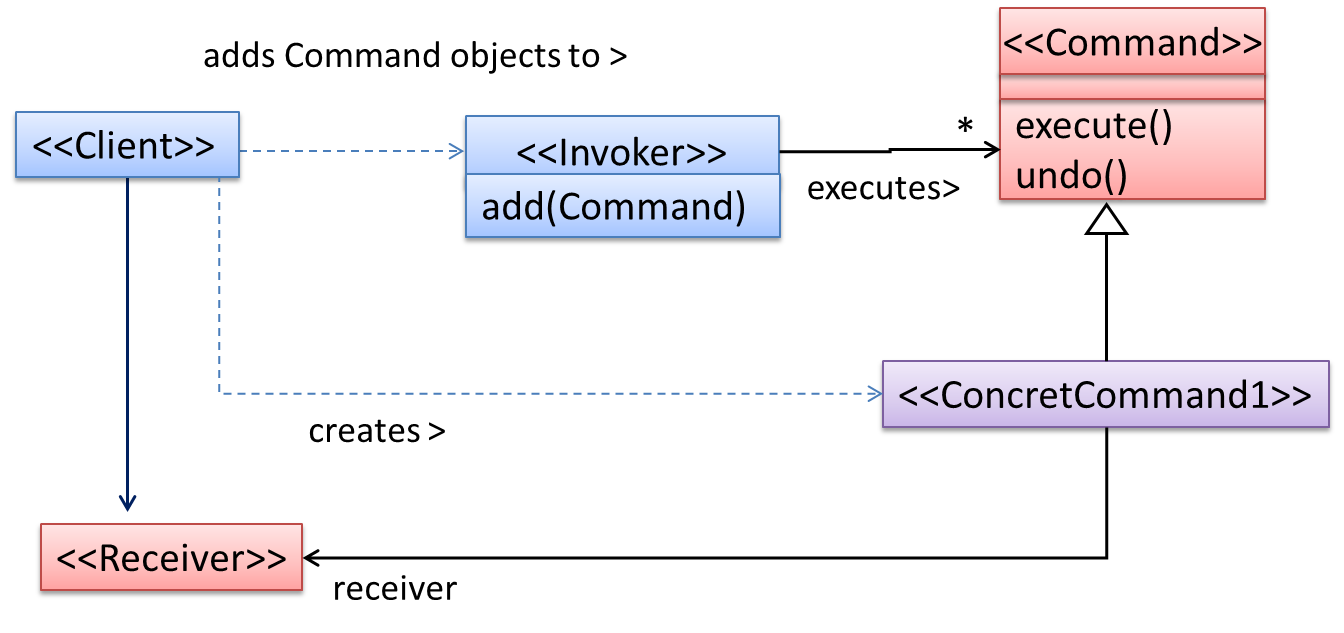

The general form of the solution is as follows.

The <<Client>> creates a <<ConcreteCommand>> object, and passes it to the <<Invoker>>. The <<Invoker>> object treats all commands as a general <<Command>> type. The <<Invoker>> issues a request by calling execute() on the command. If a command is undoable, <<ConcreteCommand>> will store the state for undoing the command prior to invoking execute(). In addition, the <<ConcreteCommand>> object may have to be linked to any <<Receiver>> of the command before it is passed to the <<Invoker>>. Note that an application of the command pattern does not have to follow the structure given above.
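The roles described above can be sketched in Java as follows. The names Command, CommandQueue, and DataStore follow the example in the text; SortCommand, ResetCommand, the executeAll() method, and the integer list inside DataStore are hypothetical details chosen for illustration. Undo support is left out to keep the sketch short.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Receiver: the data the commands operate on (contents are hypothetical).
class DataStore {
    final List<Integer> items = new ArrayList<>(List.of(3, 1, 2));
}

// The general Command type; here it is an interface.
interface Command {
    void execute();
}

class SortCommand implements Command {
    private final DataStore store;

    SortCommand(DataStore store) {
        this.store = store; // link the command to its receiver
    }

    @Override
    public void execute() {
        Collections.sort(store.items);
    }
}

class ResetCommand implements Command {
    private final DataStore store;

    ResetCommand(DataStore store) {
        this.store = store;
    }

    @Override
    public void execute() {
        store.items.clear();
    }
}

// Invoker: queues and runs commands while knowing only the Command type.
class CommandQueue {
    private final List<Command> queue = new ArrayList<>();

    void add(Command c) {
        queue.add(c);
    }

    void executeAll() {
        for (Command c : queue) {
            c.execute(); // polymorphic call; the concrete type is unknown here
        }
        queue.clear();
    }
}

class CommandDemo {
    public static void main(String[] args) {
        DataStore store = new DataStore();
        CommandQueue queue = new CommandQueue();
        queue.add(new SortCommand(store));
        queue.executeAll();
        System.out.println(store.items); // [1, 2, 3]
        queue.add(new ResetCommand(store));
        queue.executeAll();
        System.out.println(store.items); // []
    }
}
```

Adding a new command type in this sketch means adding one more class that implements Command; CommandQueue itself does not change.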

Abstraction Occurrence pattern

Can explain the Abstraction Occurrence design pattern

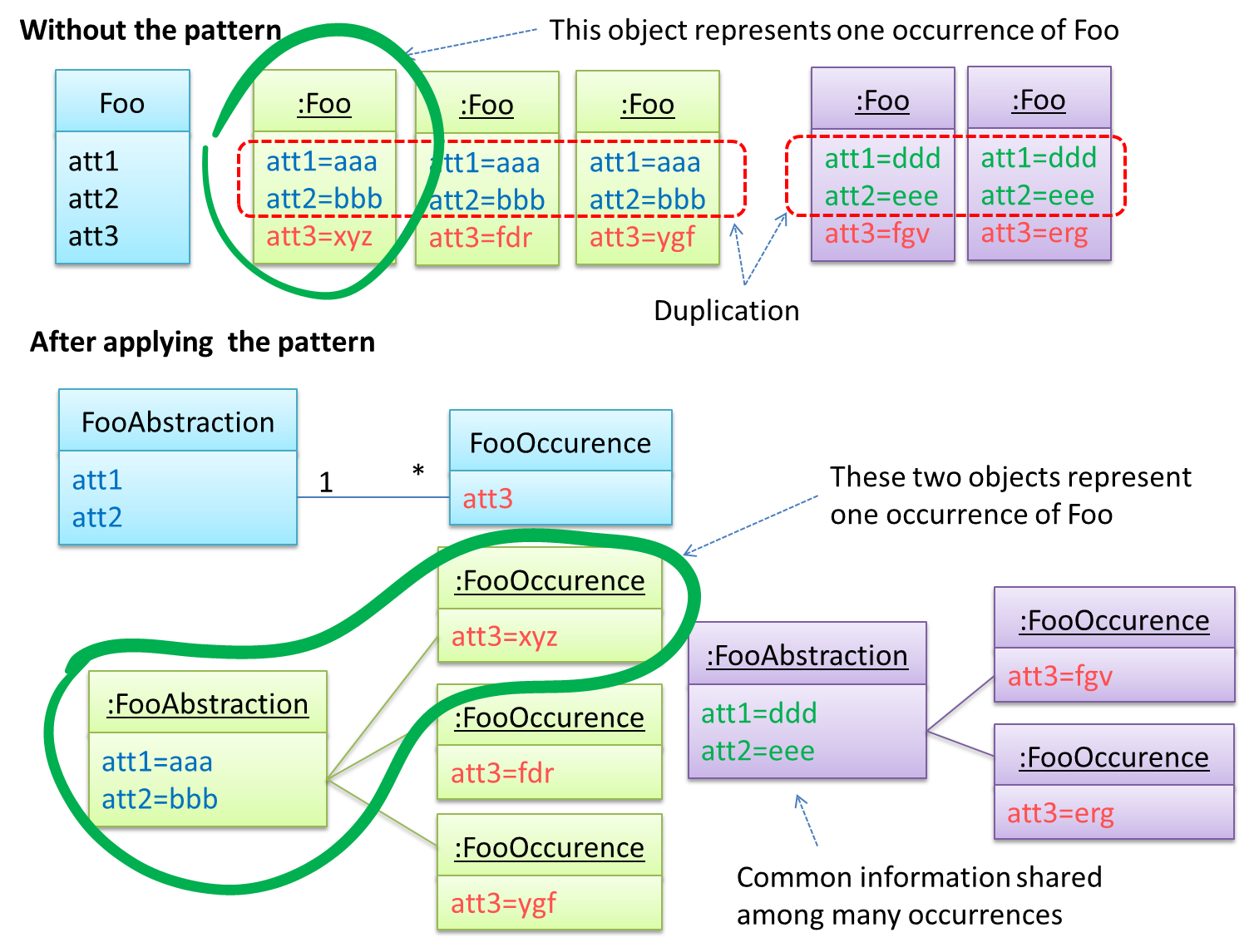

Context

There is a group of similar entities that appears to be ‘occurrences’ (or ‘copies’) of the same thing, sharing lots of common information, but also differing in significant ways.

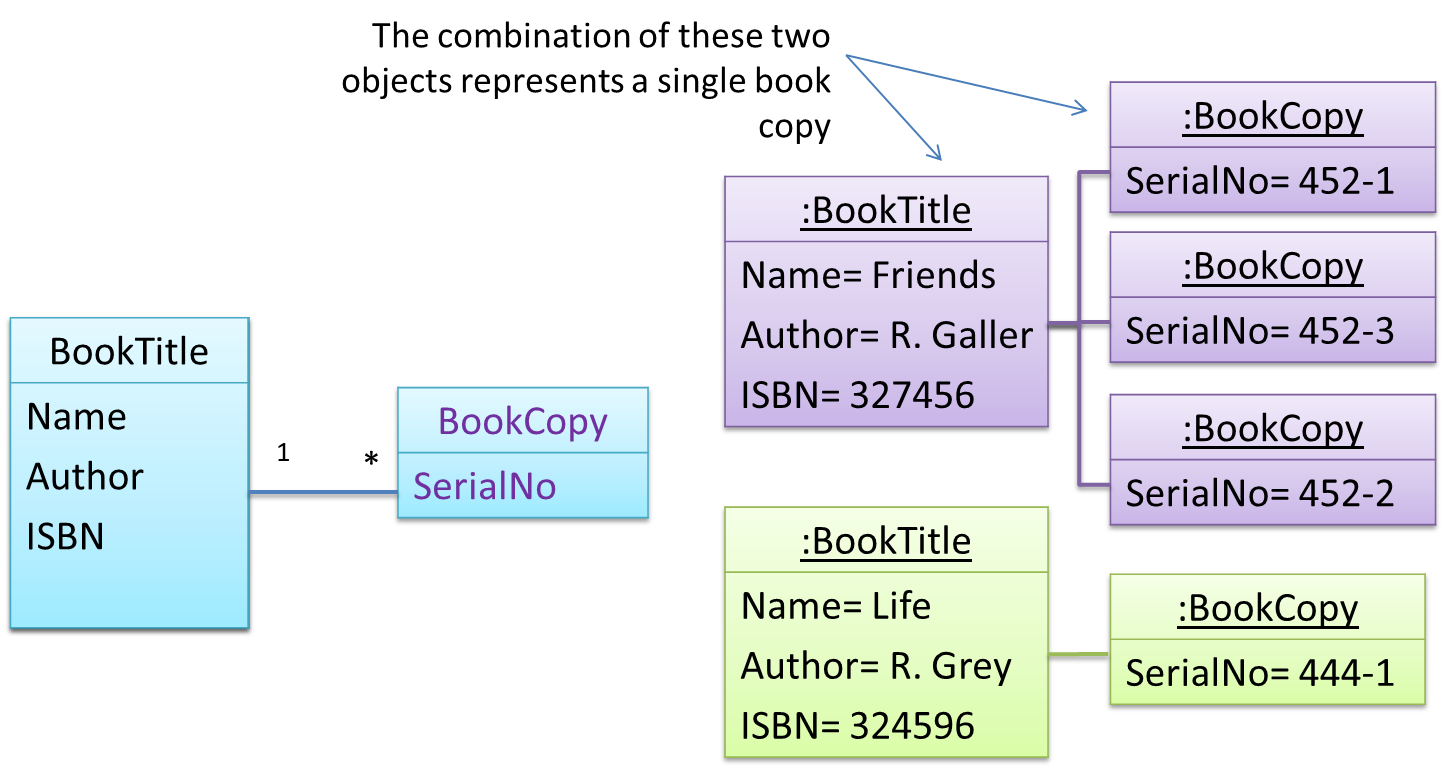

In a library, there can be multiple copies of the same book title. Each copy shares common information such as book title, author, ISBN etc. However, there are also significant differences like purchase date and barcode number (assumed to be unique for each copy of the book).

Other examples:

- Episodes of the same TV series

- Stock items of the same product model (e.g. TV sets of the same model).

Problem

Representing the objects mentioned previously as a single class would be problematic because it results in duplication of data which can lead to inconsistencies in data (if some of the duplicates are not updated consistently).

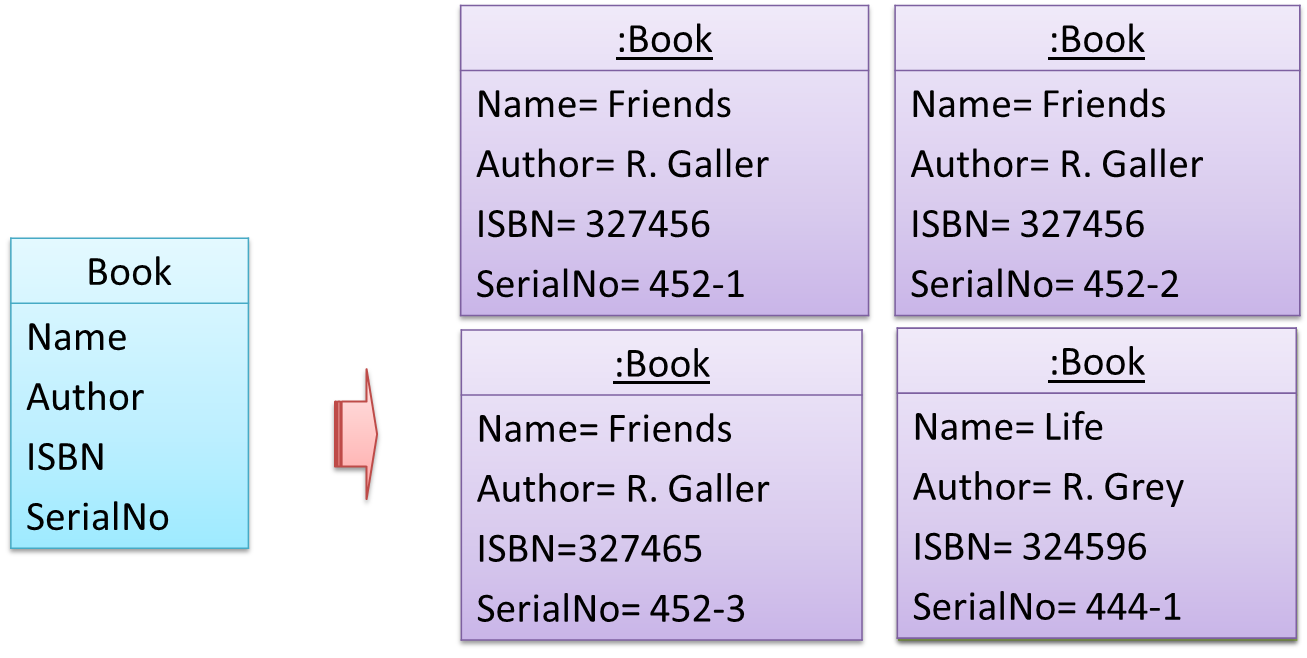

Take for example the problem of representing books in a library. Assume that there could be multiple copies of the same title, bearing the same ISBN number, but different serial numbers.

The above solution requires common information to be duplicated by all instances. This not only wastes storage space, but also creates a consistency problem. Suppose that after creating several copies of the same title, the librarian realizes that the author name was wrongly spelt. To correct this mistake, the system needs to go through every copy of the same title to make the correction. Also, if a new copy of the title is added later on, the librarian (or the system) has to make sure that all information entered is the same as the existing copies to avoid inconsistency.

Anti-pattern

Refer to the same Library example given above.

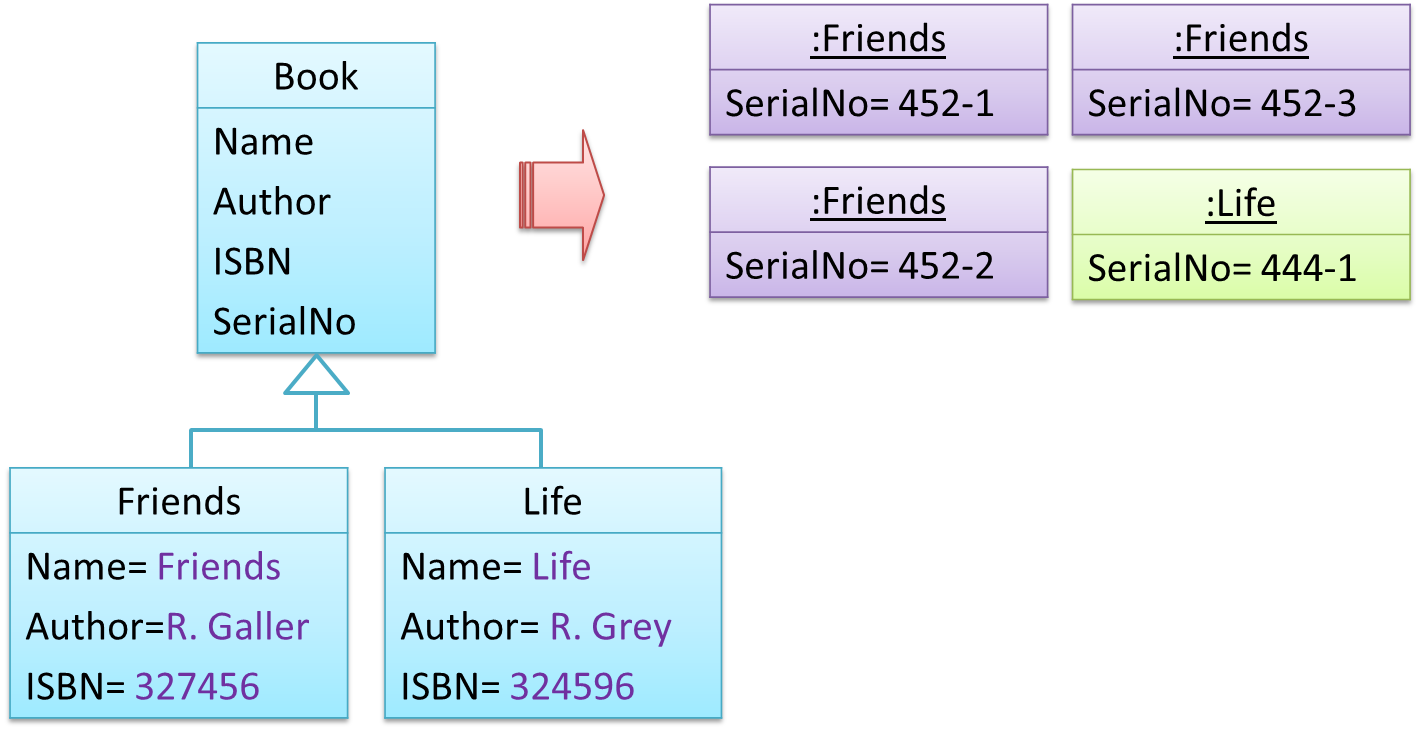

The design above segregates the common and unique information into a class hierarchy. Each book title is represented by a separate class with common data (i.e. Name, Author, ISBN) hard-coded in the class itself. This solution is problematic because each book title is represented as a class, resulting in thousands of classes (one for each title). Every time the library buys new books, the source code of the system will have to be updated with new classes.

Solution

Let a copy of an entity (e.g. a copy of a book) be represented by two objects instead of one, separating the common and unique information into two classes to avoid duplication.

Given below is how the pattern is applied to the Library example:

Here's a more generic example:

The general solution:

The << Abstraction >> class should hold all common information, and the unique information should be kept by the << Occurrence >> class. Note that ‘Abstraction’ and ‘Occurrence’ are not class names, but roles played by each class. Think of this diagram as a meta-model (i.e. a ‘model of a model’) of the BookTitle-BookCopy class diagram given above.
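As a rough code-level illustration of the BookTitle-BookCopy split, consider the sketch below. The field names, the describe() method, and the sample values are hypothetical; the point is only that the common data lives in one object that every copy refers to.

```java
// Abstraction role: information common to every copy of a title.
class BookTitle {
    private final String name;
    private String author;
    private final String isbn;

    BookTitle(String name, String author, String isbn) {
        this.name = name;
        this.author = author;
        this.isbn = isbn;
    }

    String getName() {
        return name;
    }

    String getAuthor() {
        return author;
    }

    String getIsbn() {
        return isbn;
    }

    void setAuthor(String author) {
        this.author = author;
    }
}

// Occurrence role: information unique to one physical copy.
class BookCopy {
    private final BookTitle title; // shared, not duplicated
    private final String serialNumber;

    BookCopy(BookTitle title, String serialNumber) {
        this.title = title;
        this.serialNumber = serialNumber;
    }

    String describe() {
        return title.getName() + " by " + title.getAuthor()
                + " (copy " + serialNumber + ")";
    }
}

class AbstractionOccurrenceDemo {
    public static void main(String[] args) {
        BookTitle title = new BookTitle("Some Title", "Jon Doe", "isbn-1");
        BookCopy first = new BookCopy(title, "S001");
        BookCopy second = new BookCopy(title, "S002");

        // A misspelt author name is corrected once, in one place.
        title.setAuthor("John Doe");

        System.out.println(first.describe());  // Some Title by John Doe (copy S001)
        System.out.println(second.describe()); // Some Title by John Doe (copy S002)
    }
}
```

Compare this with the single-class design described under Problem, where the same correction would have to be repeated for every copy.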

Which pairs of classes are likely to be the << Abstraction >> and the << Occurrence >> of the abstraction occurrence pattern?

- CarModel, Car. (Here CarModel represents a particular model of a car produced by the car manufacturer. E.g. BMW R4300)

- Car, Wheel

- Club, Member

- TeamLeader, TeamMember

- Magazine (E.g. ReadersDigest, PCWorld), MagazineIssue

One of the key things to keep in mind is that the << Abstraction >> does not represent a real entity. Rather, it represents some information common to a set of objects. A single real entity is represented by an object of << Abstraction >> type and << Occurrence >> type.

Before applying the pattern, some attributes have the same values for multiple objects. For example, w.r.t. the BookTitle-BookCopy example given in this handout, values of attributes such as book_title, ISBN are exactly the same for copies of the same book.

After applying the pattern, the Abstraction and the Occurrence classes together represent one entity. It is like one class has been split into two. For example, a BookTitle object and a BookCopy object combine to represent an actual Book.

- CarModel, Car: Yes

- Car, Wheel: No. Wheel is a ‘part of’ Car. A wheel is not an occurrence of Car.

- Club, Member: No. This is a ‘part of’ relationship.

- TeamLeader, TeamMember: No. A TeamMember is not an occurrence of a TeamLeader or vice versa.

- Magazine, MagazineIssue: Yes.

Which one of these is most suited for an application of the Abstraction Occurrence pattern?

(a)

Explanation:

(a) Stagings of a drama are ‘occurrences’ of the drama. They have many attributes in common (e.g., Drama name, producer, cast, etc.) but some attributes are different (e.g., venue, time).

(b) Students are not occurrences of a Teacher or vice versa

(c) Module, Exam, Assignment are distinct entities with associations among them. But none of them can be considered an occurrence of another.

[W10.2] Testing: Intermediate Techniques

Can explain testability

Testability is an indication of how easy it is to test an SUT. As testability depends a lot on the design and implementation, you should try to increase testability when you design and implement software. The higher the testability, the easier it is to achieve better quality software.

Can explain test coverage

Test coverage is a metric used to measure the extent to which testing exercises the code i.e., how much of the code is 'covered' by the tests.

Here are some examples of different coverage criteria:

- Function/method coverage : based on functions executed e.g., testing executed 90 out of 100 functions.

- Statement coverage : based on the number of lines of code executed e.g., testing executed 23k out of 25k LOC.

- Decision/branch coverage : based on the decision points exercised e.g., an if statement evaluated to both true and false with separate test cases during testing is considered 'covered'.

- Condition coverage : based on the boolean sub-expressions, each evaluated to both true and false with different test cases. Condition coverage is not the same as the decision coverage.

if(x > 2 && x < 44) is considered one decision point but two conditions.

For 100% branch or decision coverage, two test cases are required:

- (x > 2 && x < 44) == true : [e.g. x == 4]

- (x > 2 && x < 44) == false : [e.g. x == 100]

For 100% condition coverage, three test cases are required:

- (x > 2) == true, (x < 44) == true : [e.g. x == 4]

- (x < 44) == false : [e.g. x == 100]

- (x > 2) == false : [e.g. x == 0]

- Path coverage measures coverage in terms of possible paths through a given part of the code executed. 100% path coverage means all possible paths have been executed. A commonly used notation for path analysis is called the Control Flow Graph (CFG).

- Entry/exit coverage measures coverage in terms of possible calls to and exits from the operations in the SUT.
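The difference between branch coverage and condition coverage in the if(x > 2 && x < 44) example can be tried out with a small sketch like the one below. The method name inRange and the class name CoverageDemo are hypothetical; the inputs are the ones suggested above.

```java
class CoverageDemo {
    // One decision point (the whole 'if'), made up of two conditions.
    static boolean inRange(int x) {
        if (x > 2 && x < 44) {
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        // These two inputs give 100% branch coverage:
        // the decision is true once and false once.
        System.out.println(inRange(4));   // true
        System.out.println(inRange(100)); // false, because (x < 44) is false

        // Condition coverage also needs (x > 2) to be false.
        // Java's && short-circuits, so for x == 0 the second condition
        // (x < 44) is never evaluated at all.
        System.out.println(inRange(0));   // false, because (x > 2) is false
    }
}
```

Running the first two calls under a coverage tool should report both branches as covered even though (x > 2) was never false, which is why the third input adds information.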

Which of these gives us the highest intensity of testing?

(b)

Explanation: 100% path coverage implies all possible execution paths through the SUT have been tested. This is essentially ‘exhaustive testing’. While this is very hard to achieve for a non-trivial SUT, it technically gives us the highest intensity of testing. If all tests pass at 100% path coverage, the SUT code can be considered ‘bug free’. However, note that path coverage does not include paths that are missing from the code altogether because the programmer left them out by mistake.

Can explain how test coverage works

Measuring coverage is often done using coverage analysis tools. Most IDEs have inbuilt support for measuring test coverage, or at least have plugins that can measure test coverage.

Coverage analysis can be useful in improving the quality of testing e.g., if a set of test cases does not achieve 100% branch coverage, more test cases can be added to cover missed branches.

Measuring code coverage in Intellij IDEA

Can use intermediate features of JUnit

Some intermediate JUnit techniques that may be useful:

- It is possible for a JUnit test case to verify if the SUT throws the right exception.

- JUnit Rules are a way to add additional behavior to a test. e.g. to make a test case use a temporary folder for storing files needed for (or generated by) the test.

- It is possible to write methods that are automatically run before/after a test method/class. These are useful to do pre/post cleanups for example.

- Testing private methods is possible, although not always necessary.

Can explain TDD

Test-Driven Development (TDD) advocates writing the tests before writing the SUT, while evolving functionality and tests in small increments. In TDD you first define the precise behavior of the SUT using test cases, and then write the SUT to match the specified behavior. While TDD has its fair share of detractors, there are many who consider it a good way to reduce defects. One big advantage of TDD is that it guarantees the code is testable.

A) In TDD, we write all the test cases before we start writing functional code.

B) Testing tools such as JUnit require us to follow TDD.

A) False

Explanation: No, not all. We proceed in small steps, writing tests and functional code in tandem, but writing the test before we write the corresponding functional code.

B) False

Explanation: They can be used for TDD, but they can be used without TDD too.

[W10.3] Test Case Design

Can explain the need for deliberate test case design

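Except for trivial SUTs, exhaustive testing is not practical because such testing often requires a massive/infinite number of test cases.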

Consider the test cases for adding a string object to a collection:

- Add an item to an empty collection.

- Add an item when there is one item in the collection.

- Add an item when there are 2, 3, .... n items in the collection.

- Add an item that has an English, a French, a Spanish, ... word.

- Add an item that is the same as an existing item.

- Add an item immediately after adding another item.

- Add an item immediately after system startup.

- ...

Exhaustive testing of this operation can take many more test cases.

Program testing can be used to show the presence of bugs, but never to show their absence!

--Edsger Dijkstra

Every test case adds to the cost of testing. In some systems, a single test case can cost thousands of dollars e.g. on-field testing of flight-control software. Therefore, test cases need to be designed to make the best use of testing resources. In particular:

- Testing should be effective i.e., it finds a high percentage of existing bugs e.g., a set of test cases that finds 60 defects is more effective than a set that finds only 30 defects in the same system.

- Testing should be efficient i.e., it has a high rate of success (bugs found/test cases) e.g., a set of 20 test cases that finds 8 defects is more efficient than another set of 40 test cases that finds the same 8 defects.

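For testing to be E&E (effective and efficient), each new test we add should target a potential fault that is not already targeted by existing test cases.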

Given below is the sample output from a text-based program TriangleDetector that determines whether the three input numbers make up the three sides of a valid triangle. List test cases you would use to test this software. Two sample test cases are given below.

C:\> java TriangleDetector

Enter side 1: 34

Enter side 2: 34

Enter side 3: 32

Can this be a triangle?: Yes

Enter side 1:

Sample test cases,

34,34,34: Yes

0, any valid, any valid: No

In addition to obvious test cases such as

- sum of two sides == third,

- sum of two sides < third ...

We may also devise some interesting test cases such as the ones depicted below.

Note that their applicability depends on the context in which the software is operating.

- Non-integer number, negative numbers, 0, numbers formatted differently (e.g. 13F), very large numbers (e.g. MAX_INT), numbers with many decimal places, empty string, ...

- Check many triangles one after the other (will the system run out of memory?)

- Backspace, tab, CTRL+C , …

- Introduce a long delay between entering data (will the program be affected by, say the screensaver?), minimize and restore window during the operation, hibernate the system in the middle of a calculation, start with invalid inputs (the system may perform error handling differently for the very first test case), …

- Test on a different locale.

The main point to note is how difficult it is to test exhaustively, even on a trivial system.
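For reference, the sample and 'obvious' test cases listed above can be run against a sketch of the core check. The actual rules of TriangleDetector are not given, so the isTriangle method below is an assumption: it rejects non-positive sides and treats the degenerate case (sum of two sides == third) as not a triangle.

```java
// Hypothetical core check behind the TriangleDetector program.
class TriangleCheck {
    // Assumption: all sides must be positive, and the sum of any two
    // sides must be strictly greater than the third.
    static boolean isTriangle(double a, double b, double c) {
        if (a <= 0 || b <= 0 || c <= 0) {
            return false;
        }
        return a + b > c && a + c > b && b + c > a;
    }

    public static void main(String[] args) {
        System.out.println(isTriangle(34, 34, 32)); // true
        System.out.println(isTriangle(34, 34, 34)); // true
        System.out.println(isTriangle(0, 34, 34));  // false: a side is 0
        System.out.println(isTriangle(1, 2, 3));    // false: sum of two sides == third
        System.out.println(isTriangle(1, 2, 4));    // false: sum of two sides < third
    }
}
```

Even this short method only covers the numeric check; the input-handling and environment-related cases in the list above would need tests at the level of the whole program.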

Explain why exhaustive testing is not practical using the example of testing the newGame() operation in the Logic class of a Minesweeper game.

Consider this sequence of test cases:

- Test case 1. Start Minesweeper. Activate newGame() and see if it works.

- Test case 2. Start Minesweeper. Activate newGame(). Activate newGame() again and see if it works.

- Test case 3. Start Minesweeper. Activate newGame() three times consecutively and see if it works.

- …

- Test case 267. Start Minesweeper. Activate newGame() 267 times consecutively and see if it works.

Well, you get the idea. Exhaustive testing of newGame() is not practical.

Improving efficiency and effectiveness of test case design can,

- a. improve the quality of the SUT.

- b. save money.

- c. save time spent on test execution.

- d. save effort on writing and maintaining tests.

- e. minimize redundant test cases.

- f. force us to understand the SUT better.

(a)(b)(c)(d)(e)(f)

Can explain exploratory testing and scripted testing

Here are two alternative approaches to testing software: Scripted testing and Exploratory testing

- Scripted testing: First write a set of test cases based on the expected behavior of the SUT, and then perform testing based on that set of test cases.

- Exploratory testing: Devise test cases on-the-fly, creating new test cases based on the results of the past test cases.

Exploratory testing is ‘the simultaneous learning, test design, and test execution’

Here is an example thought process behind a segment of an exploratory testing session:

“Hmm... looks like feature x is broken. This usually means feature n and k could be broken too; we need to look at them soon. But before that, let us give a good test run to feature y because users can still use the product if feature y works, even if x doesn’t work. Now, if feature y doesn’t work 100%, we have a major problem and this has to be made known to the development team sooner rather than later...”

💡 Exploratory testing is also known as reactive testing, error guessing technique, attack-based testing, and bug hunting.

Exploratory Testing Explained, an online article by James Bach (James Bach is an industry thought leader in software testing).

Scripted testing requires tests to be written in a scripting language; Manual testing is called exploratory testing.

A) False

Explanation: “Scripted” means test cases are predetermined. They need not be an executable script. However, exploratory testing is usually manual.

Which testing technique is better?

(e)

Explain the concept of exploratory testing using Minesweeper as an example.

When we test the Minesweeper by simply playing it in various ways, especially trying out those that are likely to be buggy, that would be exploratory testing.

Can explain the choice between exploratory testing and scripted testing

Which approach is better – scripted or exploratory? A mix is better.

The success of exploratory testing depends on the tester’s prior experience and intuition. Exploratory testing should be done by experienced testers, using a clear strategy/plan/framework. Ad-hoc exploratory testing by unskilled or inexperienced testers without a clear strategy is not recommended for real-world non-trivial systems. While exploratory testing may allow us to detect some problems in a relatively short time, it is not prudent to use exploratory testing as the sole means of testing a critical system.

Scripted testing is more systematic, and hence, likely to discover more bugs given sufficient time, while exploratory testing would aid in quick error discovery, especially if the tester has a lot of experience in testing similar systems.

In some contexts, you will achieve your testing mission better through a more scripted approach; in other contexts, your mission will benefit more from the ability to create and improve tests as you execute them. I find that most situations benefit from a mix of scripted and exploratory approaches.

--Exploratory Testing Explained, an online article by James Bach

Scripted testing is better than exploratory testing.

B) False

Explanation: Each has pros and cons. Relying on only one is not recommended. A combination is better.

Can explain positive and negative test cases

A positive test case is when the test is designed to produce an expected/valid behavior. A negative test case is designed to produce a behavior that indicates an invalid/unexpected situation, such as an error message.

Consider testing of the method print(Integer i) which prints the value of i.

- A positive test case: i == new Integer(50)

- A negative test case: i == null

Can explain black box and glass box test case design

Test case design can be of three types, based on how much of the SUT's internal details are considered when designing test cases:

- Black-box (aka specification-based or responsibility-based) approach: test cases are designed exclusively based on the SUT’s specified external behavior.

- White-box (aka glass-box or structured or implementation-based) approach: test cases are designed based on what is known about the SUT’s implementation, i.e. the code.

- Gray-box approach: test case design uses some important information about the implementation. For example, if the implementation of a sort operation uses different algorithms to sort lists shorter than 1000 items and lists longer than 1000 items, more meaningful test cases can then be added to verify the correctness of both algorithms.

Note: these videos are from the Udacity course Software Development Process by Georgia Tech

Can explain test case design for use case based testing

Use cases can be used for system testing and acceptance testing. For example, the main success scenario can be one test case while each variation (due to extensions) can form another test case. However, note that use cases do not specify the exact data entered into the system. Instead, it might say something like user enters his personal data into the system. Therefore, the tester has to choose data by considering equivalence partitions and boundary values. The combinations of these could result in one use case producing many test cases.

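To increase the E&E of testing, high-priority use cases are given more attention. For example, a scripted approach can be used to test high-priority use cases, while an exploratory approach is used to test other areas of concern that could emerge during testing.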


Equivalence Partitioning

Can explain equivalence partitions

Consider the testing of the following operation.

isValidMonth(m) : returns true if m (an int) is in the range [1..12]

It is inefficient and impractical to test this method for all integer values [MIN_INT to MAX_INT]. Fortunately, there is no need to test all possible input values. For example, if the input value 233 failed to produce the correct result, the input 234 is likely to fail too; there is no need to test both.

In general, most SUTs do not treat each input in a unique way. Instead, they process all possible inputs in a small number of distinct ways. That means a range of inputs is treated the same way inside the SUT. Equivalence partitioning (EP) is a test case design technique that uses the above observation to improve the E&E of testing.

Equivalence partition (aka equivalence class): A group of test inputs that are likely to be processed by the SUT in the same way.

By dividing possible inputs into equivalence partitions we can,

- avoid testing too many inputs from one partition. Testing too many inputs from the same partition is unlikely to find new bugs. This increases the efficiency of testing by reducing redundant test cases.

- ensure all partitions are tested. Missing partitions can result in bugs going unnoticed. This increases the effectiveness of testing by increasing the chance of finding bugs.

Can apply EP for pure functions

Equivalence partitions (EPs) are usually derived from the specifications of the SUT.

These could be EPs for the isValidMonth(m) example:

- [MIN_INT ... 0] : below the range that produces true (produces false)

- [1 … 12] : the range that produces true

- [13 … MAX_INT] : above the range that produces true (produces false)
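As a small illustration, the sketch below implements isValidMonth as specified and checks one representative value from each of the three partitions. The class name MonthValidator and the chosen representatives (-5, 6, 40) are arbitrary picks for this example.

```java
class MonthValidator {
    // Returns true if m is in the range [1..12], as in the specification.
    static boolean isValidMonth(int m) {
        return m >= 1 && m <= 12;
    }

    public static void main(String[] args) {
        // One representative input per equivalence partition.
        System.out.println(isValidMonth(-5)); // false: from [MIN_INT ... 0]
        System.out.println(isValidMonth(6));  // true:  from [1 ... 12]
        System.out.println(isValidMonth(40)); // false: from [13 ... MAX_INT]
    }
}
```

Adding more inputs from a partition that is already represented, such as 7 or 8, is unlikely to reveal anything that 6 did not.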

When the SUT has multiple inputs, you should identify EPs for each input.

Consider the method duplicate(String s, int n): String which returns a String that contains s repeated n times.

Example EPs for s:

- zero-length strings

- string containing whitespaces

- ...

Example EPs for n:

- 0

- negative values

- ...

An EP may not have adjacent values.

Consider the method isPrime(int i): boolean that returns true if i is a prime number.

EPs for i:

- prime numbers

- non-prime numbers

Some inputs have only a small number of possible values and a potentially unique behavior for each value. In those cases we have to consider each value as a partition by itself.

Consider the method showStatusMessage(GameStatus s): String that returns a unique String for each of the possible values of s (GameStatus is an enum). In this case, each possible value for s will have to be considered as a partition.
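One way to treat every enum value as its own partition is to loop over all values in the test, as in the sketch below. The enum constants and the message strings are hypothetical, since the text does not list the actual values of GameStatus.

```java
import java.util.HashSet;
import java.util.Set;

// Hypothetical values; the real GameStatus enum is not shown in the text.
enum GameStatus { NOT_STARTED, IN_PROGRESS, WON, LOST }

class StatusMessages {
    static String showStatusMessage(GameStatus s) {
        switch (s) {
        case NOT_STARTED:
            return "Click a cell to start";
        case IN_PROGRESS:
            return "Game in progress";
        case WON:
            return "You won";
        case LOST:
            return "You lost";
        default:
            throw new IllegalArgumentException("Unknown status: " + s);
        }
    }

    public static void main(String[] args) {
        // Each enum value is a partition by itself, so test every value.
        Set<String> seen = new HashSet<>();
        for (GameStatus s : GameStatus.values()) {
            String message = showStatusMessage(s);
            System.out.println(s + " -> " + message);
            seen.add(message);
        }
        // All messages are distinct if the set has one entry per value.
        System.out.println(seen.size() == GameStatus.values().length);
    }
}
```

Looping over values() also means a newly added enum constant is picked up by the test without further changes.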

Note that the EP technique is merely a heuristic and not an exact science, especially when applied manually (as opposed to using an automated program analysis tool to derive EPs). The partitions derived depend on how one ‘speculates’ the SUT to behave internally. Applying EP under a glass-box or gray-box approach can yield more precise partitions.

Consider the EPs given above for the isValidMonth method. A different tester might use these EPs instead:

- [1 … 12] : the range that produces true

- [all other integers] : the range that produces false

Some more examples:

| Specification | Equivalence partitions |

|---|---|


Consider this SUT:

isValidName (String s): boolean

Description: returns true if s is not null and not longer than 50 characters.

A. Which one of these is least likely to be an equivalence partition for the parameter s of the isValidName method given above?

B. If you had to choose 3 test cases from the 4 given below, which one will you leave out based on the EP technique?

A. (d)

Explanation: The description does not mention anything about the content of the string. Therefore, the method is unlikely to behave differently for strings consisting of numbers.

B. (a) or (c)

Explanation: both belong to the same EP

Can apply EP for OOP methods

When deciding EPs of OOP methods, we need to identify EPs of all data participants that can potentially influence the behaviour of the method, such as,

- the target object of the method call

- input parameters of the method call

- other data/objects accessed by the method such as global variables. This category may not be applicable if using the black box approach (because the test case designer using the black box approach will not know how the method is implemented)

Consider this method in the DataStack class:

push(Object o): boolean

- Adds o to the top of the stack if the stack is not full.

- returns true if the push operation was a success.

- throws

  - MutabilityException if the global flag FREEZE==true.

  - InvalidValueException if o is null.

EPs:

- DataStack object: [full] [not full]

- o: [null] [not null]

- FREEZE: [true] [false]
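The three participants above (target object, parameter, global flag) can each be varied independently in a test. The following is a minimal sketch under several assumptions: the array-backed DataStack, the static FREEZE field standing in for the global flag, the order in which the two checks are made, and returning false when the stack is full are all choices made for this example rather than details given in the description.

```java
/** Hypothetical DataStack used to show one test input per participant partition. */
final class DataStackDemo {

    static boolean FREEZE = false; // stand-in for the global flag

    static class MutabilityException extends RuntimeException {}

    static class InvalidValueException extends RuntimeException {}

    static class DataStack {
        private final Object[] items;
        private int size = 0;

        DataStack(int capacity) {
            items = new Object[capacity];
        }

        boolean push(Object o) {
            if (FREEZE) {
                throw new MutabilityException();
            }
            if (o == null) {
                throw new InvalidValueException();
            }
            if (size == items.length) {
                return false; // assumed behaviour for a full stack
            }
            items[size++] = o;
            return true;
        }
    }

    /** Calls push once under the given FREEZE value and describes the outcome. */
    static String outcome(DataStack stack, Object o, boolean freeze) {
        FREEZE = freeze;
        try {
            return String.valueOf(stack.push(o));
        } catch (MutabilityException e) {
            return "MutabilityException";
        } catch (InvalidValueException e) {
            return "InvalidValueException";
        } finally {
            FREEZE = false; // restore the flag so that later calls are unaffected
        }
    }
}
```

For example, outcome(new DataStack(1), "a", false) covers [not full], [not null], and [false] in a single test case.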

Consider a simple Minesweeper app. What are the EPs for the newGame() method of the Logic component?

As newGame() does not have any parameters, the only obvious participant is the Logic object itself.

Note that if the glass-box or the grey-box approach is used, other associated objects that are involved in the method might also be included as participants. For example, Minefield object can be considered as another participant of the newGame() method. Here, the black-box approach is assumed.

Next, let us identify equivalence partitions for each participant. Will the newGame() method behave differently for different Logic objects? If yes, how will it differ? In this case, yes, it might behave differently based on the game state. Therefore, the equivalence partitions are:

- PRE_GAME: before the game starts, minefield does not exist yet

- READY: a new minefield has been created and waiting for player’s first move

- IN_PLAY: the current minefield is already in use

- WON, LOST: let us assume the newGame behaves the same way for these two values

Consider the Logic component of the Minesweeper application. What are the EPs for the markCellAt(int x, int y) method? The partitions in bold represent valid inputs.

- Logic: PRE_GAME, READY, IN_PLAY, WON, LOST

- x: [MIN_INT..-1] [0..(W-1)] [W..MAX_INT] (we assume a minefield size of WxH)

- y: [MIN_INT..-1] [0..(H-1)] [H..MAX_INT]

- Cell at (x,y): HIDDEN, MARKED, CLEARED

Boundary Value Analysis

Can explain boundary value analysis

Boundary Value Analysis (BVA) is a test case design heuristic that is based on the observation that bugs often result from incorrect handling of boundaries of equivalence partitions. This is not surprising, as the end points of the boundary are often used in branching instructions etc. where the programmer can make mistakes.

markCellAt(int x, int y) operation could contain code such as if (x > 0 && x <= (W-1)) which involves boundaries of x’s equivalence partitions.

BVA suggests that when picking test inputs from an equivalence partition, values near boundaries (i.e. boundary values) are more likely to find bugs.

Boundary values are sometimes called corner cases.

Boundary value analysis recommends testing only values that reside on the equivalence class boundary.

False

Explanation: It does not recommend testing only those values on the boundary. It merely suggests that values on and around a boundary are more likely to cause errors.

Can apply boundary value analysis

Typically, we choose three values around the boundary to test: one value from the boundary, one value just below the boundary, and one value just above the boundary. The number of values to pick depends on other factors, such as the cost of each test case.
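The sketch below illustrates why values near a boundary are worth picking. It assumes the valid partition for x is [0..(W-1)] with a hypothetical width W = 10, and compares the intended check against a variant that uses x > 0 where x >= 0 was meant. A value from the middle of the partition cannot tell the two apart, while the boundary values can.

```java
/** Compares an intended range check with an off-by-one variant. */
final class BoundaryDemo {

    static final int W = 10; // assumed minefield width

    /** Intended check for the valid partition [0..(W-1)]. */
    static boolean isValidX(int x) {
        return x >= 0 && x <= W - 1;
    }

    /** Variant with an off-by-one slip at the lower boundary. */
    static boolean isValidXBuggy(int x) {
        return x > 0 && x <= W - 1;
    }

    /** Values on, just below, and just above each boundary of [0..(W-1)]. */
    static int[] boundaryValues() {
        return new int[] {-1, 0, 1, W - 2, W - 1, W};
    }

    /** Returns the first input on which the two checks disagree, or null if none. */
    static Integer firstDisagreement(int[] inputs) {
        for (int x : inputs) {
            if (isValidX(x) != isValidXBuggy(x)) {
                return x;
            }
        }
        return null;
    }
}
```

Here firstDisagreement(boundaryValues()) finds the input 0, whereas firstDisagreement(new int[] {5}) finds nothing.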

Some examples:

| Equivalence partition | Some possible boundary values |

|---|---|

| [1-12] | 0, 1, 2, 11, 12, 13 |

| [MIN_INT, 0] | MIN_INT, MIN_INT+1, -1, 0, 1 |

| [any non-null String] | Empty String, a String of maximum possible length |

| [prime numbers] | No specific boundary |

| [non-empty Stack] | Stack with: one element, two elements, no empty spaces, only one empty space |
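For integer ranges, the boundary values in the first two rows can be derived mechanically. The helper below is a small sketch (the class and method names are made up for this example) that lists the value on each boundary plus its immediate neighbours, skipping neighbours that would overflow the int range.

```java
import java.util.ArrayList;
import java.util.List;

/** Derives candidate boundary values for an integer partition [lo..hi]. */
final class BoundaryValues {

    /** Returns the values on and immediately around both ends of [lo..hi]. */
    static List<Integer> around(int lo, int hi) {
        List<Integer> values = new ArrayList<>();
        for (int bound : new int[] {lo, hi}) {
            if (bound > Integer.MIN_VALUE) {
                addIfAbsent(values, bound - 1); // just below the boundary
            }
            addIfAbsent(values, bound);         // on the boundary
            if (bound < Integer.MAX_VALUE) {
                addIfAbsent(values, bound + 1); // just above the boundary
            }
        }
        return values;
    }

    /** Adds v unless it is already present (relevant for very narrow ranges). */
    private static void addIfAbsent(List<Integer> values, int v) {
        if (!values.contains(v)) {
            values.add(v);
        }
    }
}
```

Calling around(1, 12) gives 0, 1, 2, 11, 12, 13, and around(Integer.MIN_VALUE, 0) gives MIN_INT, MIN_INT+1, -1, 0, 1, matching the first two rows of the table.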

[W10.4] Other QA Techniques

Can explain software quality assurance

Software Quality Assurance (QA) is the process of ensuring that the software being built has the required levels of quality.

While testing is the most common activity used in QA, there are other complementary techniques such as static analysis, code reviews, and formal verification.

Can explain validation and verification

Quality Assurance = Validation + Verification

QA involves checking two aspects:

- Validation: are we building the right system i.e., are the requirements correct?

- Verification: are we building the system right i.e., are the requirements implemented correctly?

Whether something belongs under validation or verification is not that important. What is more important is that both are done, instead of limiting to verification (i.e., remember that the requirements can be wrong too).

Choose the correct statements about validation and verification.

- a. Validation: Are we building the right product?, Verification: Are we building the product right?

- b. It is very important to clearly distinguish between validation and verification.

- c. The important thing about validation and verification is to remember to pay adequate attention to both.

- d. Developer-testing is more about verification than validation.

- e. QA covers both validation and verification.

- f. A system crash is more likely to be a verification failure than a validation failure.

(a)(c)(d)(e)(f)

Explanation:

Whether something belongs under validation or verification is not that important. What is more important is that we do both.

Developer testing is more about bugs in code, rather than bugs in the requirements.

In QA, system testing is more about verification (does the system follow the specification?) and acceptance testing is more about validation (does the system solve the user’s problem?).

A system crash is more likely to be a bug in the code, not in the requirements.

Can explain code reviews

Code review is the systematic examination of code with the intention of finding where the code can be improved.

Reviews can be done in various forms. Some examples below:

- In pair programming

  - As pair programming involves two programmers working on the same code at the same time, there is an implicit review of the code by the other member of the pair.

  Pair Programming:

  Pair programming is an agile software development technique in which two programmers work together at one workstation. One, the driver, writes code while the other, the observer or navigator, reviews each line of code as it is typed in. The two programmers switch roles frequently. [source: Wikipedia]

- Pull Request reviews

  - Project management platforms such as GitHub and BitBucket allow new code to be proposed as Pull Requests and provide the ability for others to review the code in the PR.

- Formal inspections

  - Inspections involve a group of people systematically examining project artifacts to discover defects. Members of the inspection team play various roles during the process, such as:

    - the author - the creator of the artifact

    - the moderator - the planner and executor of the inspection meeting

    - the secretary - the recorder of the findings of the inspection

    - the inspector/reviewer - the one who inspects/reviews the artifact.

Advantages of code reviews over testing:

- They can detect functionality defects as well as other problems such as coding standard violations.

- They can verify non-code artifacts and incomplete code.

- They do not require test drivers or stubs.

Disadvantages:

- It is a manual process and therefore, error prone.

Can explain static analysis

Static analysis: Static analysis is the analysis of code without actually executing the code.

Static analysis of code can find useful information such as unused variables, unhandled exceptions, style errors, and statistics. Most modern IDEs come with some inbuilt static analysis capabilities. For example, an IDE can highlight unused variables as you type the code into the editor.

Higher-end static analyzer tools can perform more complex analysis such as locating potential bugs, memory leaks, inefficient code structures etc.
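As a small illustration, the code below compiles and runs, yet contains two things that many IDEs and static analyzers would typically report without executing it: a local variable that is never read, and a string comparison using == instead of equals(). The class and method names are made up for this example, and the exact warnings shown depend on the tool and its configuration.

```java
/** Code that works for the inputs shown but contains typical static analysis findings. */
final class AnalyzerBait {

    /** Sums the values; the variable count is assigned but never read. */
    static int sum(int[] values) {
        int count = values.length; // likely reported as an unused variable
        int total = 0;
        for (int v : values) {
            total += v;
        }
        return total;
    }

    /** Compares two strings by reference rather than by content. */
    static boolean sameText(String a, String b) {
        return a == b; // likely reported; equals() was probably intended
    }

    public static void main(String[] args) {
        System.out.println(sum(new int[] {1, 2, 3}));
        // Two distinct String objects with equal content are not == to each other.
        System.out.println(sameText(new String("hi"), new String("hi")));
    }
}
```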

Linters are a subset of static analyzers that specifically aim to locate areas where the code can be made 'cleaner'.

Can explain formal verification

Formal verification uses mathematical techniques to prove the correctness of a program.

Advantages:

- Formal verification can be used to prove the absence of errors. In contrast, testing can only prove the presence of errors, not their absence.

Disadvantages:

- It only proves the compliance with the specification, but not the actual utility of the software.

- It requires highly specialized notations and knowledge which makes it an expensive technique to administer. Therefore, formal verifications are more commonly used in safety-critical software such as flight control systems.

Testing cannot prove the absence of errors. It can only prove the presence of errors. However, formal methods can prove the absence of errors.

True

Explanation: While using formal methods is more expensive than testing, it indeed can prove the correctness of a piece of software conclusively, in certain contexts. Getting such proof via testing requires exhaustive testing, which is not practical to do in most cases.

Project Milestone: mid-v1.3

Complete a draft of the DG and seek feedback from your tutor. Continue to enhance features. Make code RepoSense-compatible. Try doing a proper release.

Project Management:

- Continue to do deliberate project management using GitHub issue tracker, milestones, labels, etc. as you did in v1.2.

- 💡 We recommend that each PR also updates the relevant parts of documents and tests. That way, your documentation/testing work will not pile up towards the end.

- 💡 There is a way to get GitHub to auto-close the relevant issue when a PR is merged (example).

Ensure your code is RepoSense-compatible

In previous semesters we asked students to annotate all their code using special @@author tags so that we can extract each student's code for grading. This semester, we are trying out a tool called RepoSense that is expected to reduce the need for such tagging, and also make it easier for you to see (and learn from) code written by others.

1. View the current status of code authorship data:

- The report generated by the tool is available at Project Code Dashboard. The feature that is most relevant to you is the Code Panel (shown on the right side of the screenshot above). It shows the code attributed to a given author. You are welcome to play around with the other features (they are still under development and will not be used for grading this semester).

- Click on your name to load the code attributed to you (based on Git blame/log data) onto the code panel on the right.

- If the code shown roughly matches the code you wrote, all is fine and there is nothing for you to do.

2. If the code does not match:

- Here are the possible reasons for the code shown not to match the code you wrote:

  - the git username in some of your commits does not match your GitHub username (perhaps you missed our instructions to set your Git username to match GitHub username earlier in the project, or GitHub did not honor your Git username for some reason)

  - the actual authorship does not match the authorship determined by git blame/log e.g., another student touched your code after you wrote it, and Git log attributed the code to that student instead

- In those cases,

  - Install RepoSense (see the Getting Started section of the RepoSense User Guide)

  - Use the two methods described in the RepoSense User Guide section Configuring a Repo to Provide Additional Data to RepoSense to provide additional data to the authorship analysis to make it more accurate.

  - If you add a config.json file to your repo (as specified by one of the two methods),

    - Please use the template json file given in the module website so that your display name matches the name we expect it to be.

    - If your commits have multiple author names, specify all of them e.g., "authorNames": ["theMyth", "theLegend", "theGary"]

    - Update the line config.json in the .gitignore file of your repo as /config.json so that it ignores the config.json produced by the app but not the _reposense/config.json.

  - If you add @@author annotations, please follow the guidelines below:

Adding @@author tags to indicate authorship

- Mark your code with a //@@author {yourGithubUsername}. Note the double @.
  The //@@author tag should indicate the beginning of the code you wrote. The code up to the next //@@author tag or the end of the file (whichever comes first) will be considered as written by that author. Here is a sample code file:

      //@@author johndoe
      method 1 ...
      method 2 ...
      //@@author sarahkhoo
      method 3 ...
      //@@author johndoe
      method 4 ...

- If you don't know who wrote the code segment below yours, you may put an empty //@@author (i.e. no GitHub username) to indicate the end of the code segment you wrote. The author of code below yours can add the GitHub username to the empty tag later. Here is a sample code with an empty author tag:

      method 0 ...
      //@@author johndoe
      method 1 ...
      method 2 ...
      //@@author
      method 3 ...
      method 4 ...

- The author tag syntax varies based on file type e.g. for java, css, fxml. Use the corresponding comment syntax for non-Java files.
  Here is an example code from an xml/fxml file.

      <!-- @@author sereneWong -->
      <textbox>
        <label>...</label>
        <input>...</input>
      </textbox>
      ...

- Do not put the //@@author inside java header comments.

  👎

      /**
       * Returns true if ...
       * @@author johndoe
       */

  👍

      //@@author johndoe
      /**
       * Returns true if ...
       */

What to and what not to annotate

- Annotate both functional and test code. There is no need to annotate documentation files.

- Annotate only significant size code blocks that can be reviewed on their own e.g., a class, a sequence of methods, a method.
  Claiming credit for code blocks smaller than a method is discouraged but allowed. If you do, do it sparingly and only claim meaningful blocks of code such as a block of statements, a loop, or an if-else statement.

  - If an enhancement required you to do tiny changes in many places, there is no need to annotate all those tiny changes; you can describe those changes in the Project Portfolio page instead.

  - If a code block was touched by more than one person, either let the person who wrote most of it (e.g. more than 80%) take credit for the entire block, or leave it as 'unclaimed' (i.e., no author tags).

  - Related to the above point, if you claim a code block as your own, more than 80% of the code in that block should have been written by yourself. For example, no more than 20% of it can be code you reused from somewhere.

  - 💡 GitHub has a blame feature and a history feature that can help you determine who wrote a piece of code.

- Do not try to boost the quantity of your contribution using unethical means such as duplicating the same code in multiple places. In particular, do not copy-paste test cases to create redundant tests. Even repetitive code blocks within test methods should be extracted out as utility methods to reduce code duplication. Individual members are responsible for making sure code attributed to them is correct. If you notice a team member claiming credit for code that he/she did not write or using other questionable tactics, you can email us (after the final submission) to let us know.

- If you wrote a significant amount of code that was not used in the final product,

  - Create a folder called {project root}/unused

  - Move unused files (or copies of files containing unused code) to that folder

  - use //@@author {yourGithubUsername}-unused to mark unused code in those files (note the suffix unused) e.g.

        //@@author johndoe-unused
        method 1 ...
        method 2 ...

    Please put a comment in the code to explain why it was not used.

- If you reused code from elsewhere, mark such code as //@@author {yourGithubUsername}-reused (note the suffix reused) e.g.

      //@@author johndoe-reused
      method 1 ...
      method 2 ...

- You can use empty @@author tags to mark code as not yours when RepoSense attributes the code to you incorrectly.

  - Code generated by the IDE/framework should not be annotated as your own.

  - Code you modified in minor ways e.g. adding a parameter. These should not be claimed as yours but you can mention these additional contributions in the Project Portfolio page if you want to claim credit for them.

- After you are satisfied with the new results (i.e., results produced by running RepoSense locally), push the config.json file you added and/or the annotated code to your repo. We'll use that information the next time we run RepoSense (we run it at least once a week).

- If you choose to annotate code, please annotate code chunks not smaller than a method. We do not grade code snippets smaller than a method.

- If you encounter any problem when doing the above or if you have questions, please post in the forum.

We recommend you ensure your code is RepoSense-compatible by v1.3.

Product:

- Do a proper product release as described in the Developer Guide. You can name it something like v1.2.1. Ensure that the jar file works as expected by doing some manual testing. Reason: You are required to do a proper product release for v1.3. Doing a trial at this point will help you iron out any problems in advance. It may take additional effort to get the jar working especially if you use third party libraries or additional assets such as images.

Documentation:

- User Guide: Update where the document does not match the current product.

- Developer Guide: Similar to the User Guide.

Questions to discuss during tutorial: Divide these questions among team members. Be prepared to answer questions allocated to you.

Q1

- What is Liskov Substitution Principle?
  Give an example from the project where LSP is followed and explain how to change the code to break LSP.

- Explain how Law of Demeter affects coupling
  a. Add a line to this code that violates LoD

      void foo(P p){
          //...
      }

- Give an example in the project code that violates the Law of Demeter.

Q2

- Explain and justify: testing should be efficient and effective

- Explain how exploratory and scripted testing is used in AB4/project

- Give an example of a negative test case in AB4/project

- Explain grey-box test case design

Q3

- Explain: Equivalence Partitioning improves E&E of testing

- What are the EPs for the parameter day of this method

      /**
       * Returns true if the three values represent a valid day
       */
      boolean isValidDay(int year, int month, int day){
      }

Q4

- Explain: Boundary Value Analysis improves E&E of testing

- What are the boundary values for the parameter day in the question above?

- How can EP and BVA heuristics be used in AB4/project? Hint: See [AB4 Learning Outcomes: LO-TestCaseDesignHeuristics]

- How do you ensure some clean up code is run after each JUnit test case?

Q5

- What is test coverage? How is it used in AB4?

- How to measure coverage in Intellij?

- What’s the difference between validation and verification?
  Are acceptance tests validation tests or verification tests?

- Give an example of static analysis being used in Intellij
